Welcome to Singularity Weblog

Discover the future of technology. Avoid dangers. Embrace opportunities. Create a better future, better you.

What is Singularity Weblog?

A Conversation about Exponential Technology, Accelerating Change, Artificial Intelligence, and Ethics

Discover how to: Identify unprecedented dangers and opportunities. See a better future, better you. Give birth to your own ideas. Live long and prosper.

AI Blog

Stay informed about how Artificial Intelligence and other exponential technologies will change your business and your life.

Singularity.FM Podcast

Listen to the best singularity podcast: 300+ expert interviews and 7 million downloads. It's the place where you interview the future.

Keynote Speaker

Hire the “Larry King of the Singularity.” Make your event unforgettable. Inspire your audience not to fear the future but to embrace and create it.

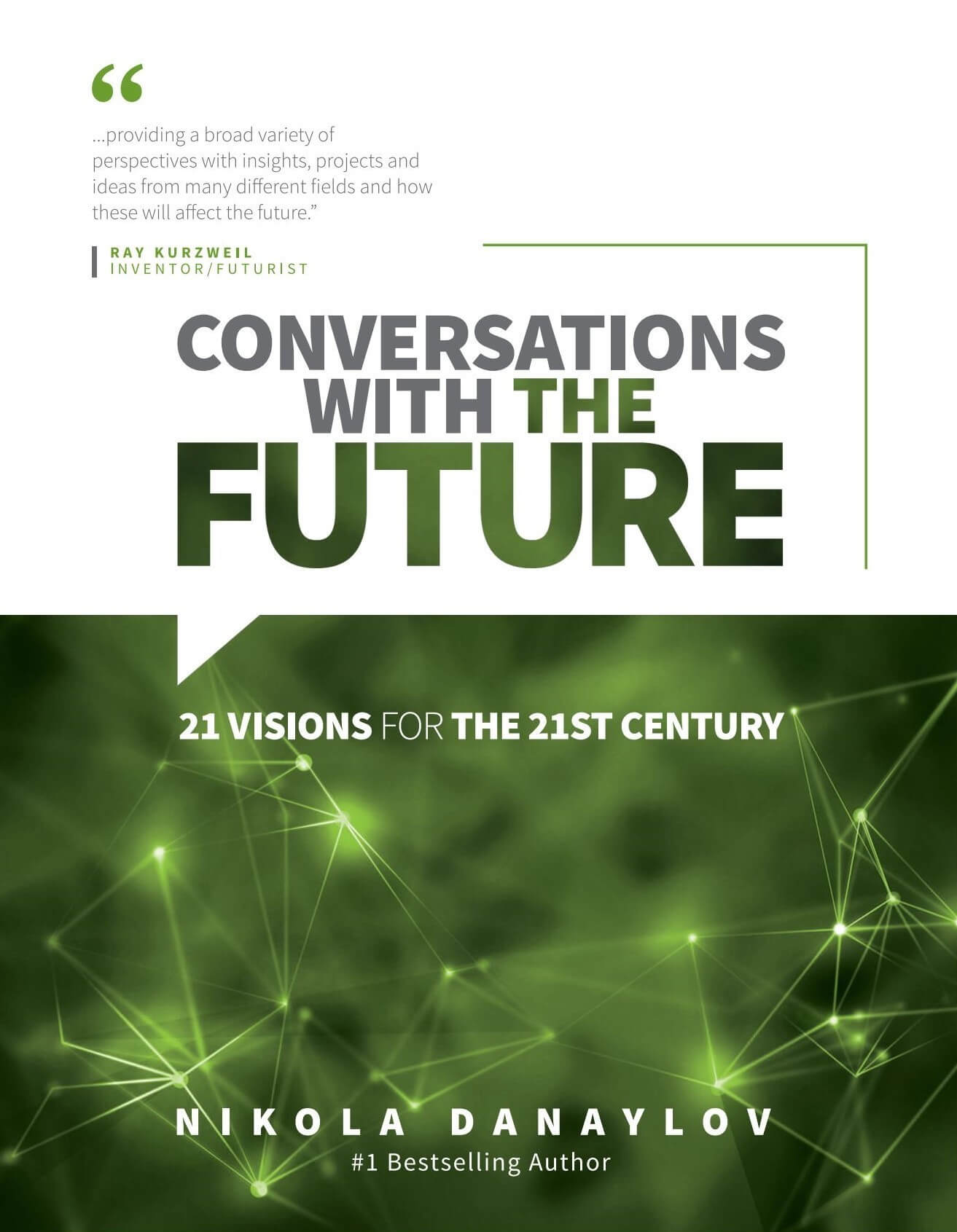

Conversations With The Future

In “Conversations with the Future: 21 Visions for the 21st Century,” we embark on a thought-provoking journey through the complexities and possibilities that the future holds. This collection of enlightening dialogues offers readers an in-depth look at the potential scenarios and challenges of the 21st century.

Each chapter presents a unique vision of the future, shaped by conversations with leading thinkers, innovators, and scholars. From the role of AI in shaping our lives to the impact of genetics, robotics, nanotechnology, 3D printing, and life extension, the book explores a wide range of topics that are crucial to our collective future.

“Conversations with the Future” is more than just a book; it’s an invitation to engage in the dialogue about our future and to consider how our actions today will shape the world of tomorrow. Whether you’re a futurist, a student, or simply curious about what lies ahead, this book will provide you with insights and perspectives that will provoke thought, inspire action, and ignite conversation.