Robin Hanson (part 2): Social Science or Extremist Politics in Disguise?!

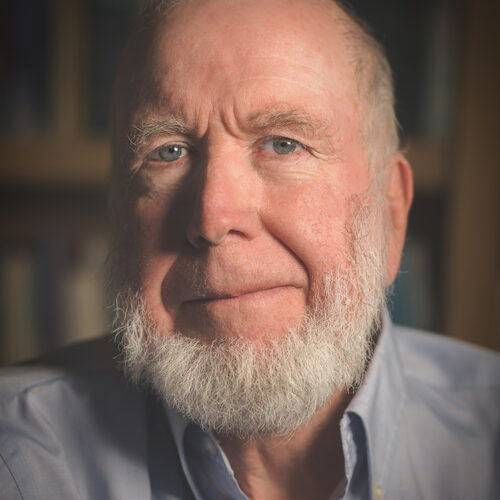

My second interview with economist Robin Hanson was by far the most vigorous debate ever held on Singularity 1 on 1. I have to say that I have rarely, if ever, disagreed so strongly with any of my podcast guests. So why do I get so fired up about Robin’s ideas, you may ask?!

Well, here is just one reason why I do:

The ideas of economists and political philosophers, both when they are right and when they are wrong, are more powerful than is commonly believed. Indeed, the world is ruled by little else. Practical men, who believe themselves to be quite exempt from any intellectual influences, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back. I am sure that the power of vested interests is vastly exaggerated compared with the gradual encroachment of ideas. Soon or late, it is ideas, not vested interests, which are dangerous for good or evil.

John Maynard Keynes, The General Theory of Employment, Interest and Money

To be even clearer, I believe that it is ideas like Robin’s that may, and often do, have a direct impact on our future.

And so I am very conflicted. Ever since I finished recording my second interview with Hanson, I have been torn inside:

On the one hand, I really like Robin a lot: he is that very likable fellow from the trailer of The Methuselah Generation: The Science of Living Forever who, like me, would like to live forever and supports cryonics. In addition, Hanson is clearly a very intelligent person with a diverse background and education in physics, philosophy, computer programming, artificial intelligence, and economics. He’s got a great smile and, as you will see throughout the interview, remains remarkably gracious in the face of my verbal attacks on his ideas.

On the other hand, after reading his book draft on the Em Economy, I believe that some of his suggestions have much less to do with social science and much more to do with his libertarian bias and what I will call “an extremist politics in disguise.”

So, here is the gist of our disagreement, as I see it:

I say that no social science, in between the lines of its economic reasoning, can logically or reasonably suggest such details as: policies of social discrimination and collective punishment; the complete privatization of law, crime detection, punishment, and adjudication; that some emulations should be run 1,000 times faster than others while also being given 1,000 times more voting power; or that emulations who can’t pay their storage fees should either be restored from previous backups or be deleted outright (isn’t this like saying that if you fail to pay your rent you should be shot dead?!)…

Suggestions like the above are no mere details: they reflect an extremist bias toward laissez-faire ideology, dangerously masquerading as (impartial) social science.

During OSCON 2007, Robin Hanson said:

“It’s [bias] much worse than you think. And you think you are doing something about it and you are not.”

I will go further and claim that Prof. Hanson himself is a prime example of precisely such a bias, while believing that he is free of it. Not only does he give no justification for the suggestions above, but in principle no social science could ever justify positions on issues that are profoundly ethical and political in nature. (So, in a way, I am arguing about the proper limits, scope, and sphere of economics: the domain within which its tools can give us worthwhile insights for the benefit of society as a whole. It is telling that the “father of economics,” Adam Smith, was a moral philosopher.)

I also agree with Robin’s closing message from our first interview, namely that “details matter.” That is precisely why I paid such close attention to, and was so irked by, some of the side “details” in his book’s draft.

There are two quotes I will no doubt remember: one from Robin’s book draft, which I absolutely like and agree with, and another from our second interview, which frankly shocked me and which I find completely abhorrent:

We can’t do anything about the past, however. People often excuse this by saying that we know a lot more about the past. But modest efforts have often given substantial insights into our future, and we would know much more about the future if we tried harder.

The Third Reich will be a democracy by now! (Yes, you can give Robin the benefit of the doubt, since he said this in the heat of our argument. On the other hand, as Hanson says, “details do matter.”)

So, my question to you is this: Is Robin Hanson’s upcoming book on the Em Economy social science or extremist politics in disguise?!

To answer this properly, I would recommend that you watch both the first and the second interview, read Robin’s book when it comes out, and judge for yourself. Either way, don’t hesitate to let me know what you think and, in particular, where and how I may be misunderstanding or misrepresenting Prof. Hanson’s work and ideas.

As always, you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Robin Hanson?

Robin Hanson is an associate professor of economics at George Mason University, a research associate at the Future of Humanity Institute of Oxford University, and chief scientist at Consensus Point. After receiving his Ph.D. in social science from the California Institute of Technology in 1997, Robin was a Robert Wood Johnson Foundation health policy scholar at the University of California at Berkeley. In 1984, Robin received a master’s in physics and a master’s in the philosophy of science from the University of Chicago, and afterward spent nine years researching artificial intelligence, Bayesian statistics, and hypertext publishing at Lockheed, NASA, and independently.

Robin has over 70 publications, including articles in Applied Optics, Business Week, CATO Journal, Communications of the ACM, Economics Letters, Econometrica, Economics of Governance, Extropy, Forbes, Foundations of Physics, IEEE Intelligent Systems, Information Systems Frontiers, Innovations, International Joint Conference on Artificial Intelligence, Journal of Economic Behavior and Organization, Journal of Evolution and Technology, Journal of Law Economics and Policy, Journal of Political Philosophy, Journal of Prediction Markets, Journal of Public Economics, Medical Hypotheses, Proceedings of the Royal Society, Public Choice, Social Epistemology, Social Philosophy and Policy, Theory and Decision, and Wired.

Robin has pioneered prediction markets, also known as information markets or idea futures, since 1988. He was the first to write in detail about people creating and subsidizing markets in order to gain better estimates on those topics. Robin was a principal architect of the first internal corporate markets, at Xanadu in 1990, of the first web markets, the Foresight Exchange since 1994, and of DARPA’s Policy Analysis Market, from 2001 to 2003. Robin has developed new technologies for conditional, combinatorial, and intermediated trading, and has studied insider trading, manipulation, and other foul play. Robin has written and spoken widely on the application of idea futures to business and policy, being mentioned in over one hundred press articles on the subject, and advising many ventures, including GuessNow, Newsfutures, Particle Financial, Prophet Street, Trilogy Advisors, XPree, YooNew, and undisclosable defense research projects. He is now chief scientist at Consensus Point.

Robin has diverse research interests, with papers on spatial product competition, health incentive contracts, group insurance, product bans, evolutionary psychology and bioethics of health care, voter information incentives, incentives to fake expertise, Bayesian classification, agreeing to disagree, self-deception in disagreement, probability elicitation, wiretaps, image reconstruction, the history of science prizes, reversible computation, the origin of life, the survival of humanity, very long term economic growth, growth given machine intelligence, and interstellar colonization.