James Barrat on the Singularity, AI and Our Final Invention

Socrates / Podcasts

Posted on: October 1, 2013 / Last Modified: May 29, 2022

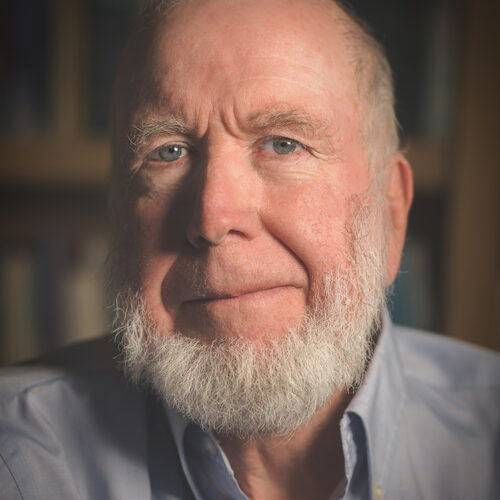

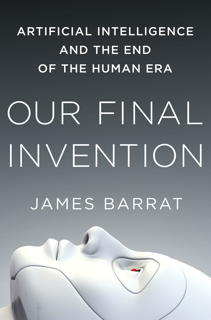

For 20 years James Barrat has created documentary films for National Geographic, the BBC, Discovery Channel, History Channel, and public television. In 2000, during the course of his career as a filmmaker, James interviewed Ray Kurzweil and Arthur C. Clarke. The latter interview entirely transformed Barrat’s views on artificial intelligence and led him to write a book on the technological singularity called Our Final Invention: Artificial Intelligence and the End of the Human Era.

I read an advance copy of Our Final Invention, and it is by far the most thoroughly researched and comprehensive anti-The Singularity is Near book that I have read so far. And so I couldn’t help but invite James on Singularity 1 on 1 so that we could discuss the reasons for his abrupt change of mind and consequent fear of the singularity.

During our 70-minute conversation with Barrat, we cover a variety of interesting topics such as his work as a documentary filmmaker who takes interesting and complicated subjects and makes them simple to understand; why writing was his first love, and how he got interested in the technological singularity; how his initial optimism about AI turned into pessimism; the thesis of Our Final Invention; why he sees artificial intelligence as more like ballistic missiles than video games; why true intelligence is an inherently unpredictable “black box”; how we can study AI before we can actually create it; hard vs slow take-off scenarios; the positive bias in the singularity community; our current chances of survival and what we should do…

As always, you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is James Barrat?

For twenty years James Barrat, filmmaker and author of Our Final Invention, has created documentary films for broadcasters including National Geographic Television, the BBC, the Discovery Channel, the History Channel, the Learning Channel, Animal Planet, and public television affiliates in the US and Europe.

Barrat scripted many episodes of National Geographic Television’s award-winning Explorer series, and went on to produce one-hour and half-hour films for the NGC’s Treasure Seekers, Out There, Snake Wranglers, and Taboo series. In 2004 Barrat created the pilot for History Channel’s #1-rated original series Digging for the Truth. His highly rated film Lost Treasures of Afghanistan, created for National Geographic Television Specials, aired on PBS in the spring of 2005.

The Gospel of Judas, which he produced and directed, set ratings records for NGC and NGCI when it aired in April 2006. Another NGT Special, the 2007 Inside Jerusalem’s Holiest, features unprecedented access to the Muslim Noble Sanctuary and the Dome of the Rock. In 2008 Barrat returned to Israel to create the NGT Special Herod’s Lost Tomb, the film component of a multimedia exploration of the discovery of King Herod the Great’s Tomb by archeologist Ehud Netzer. In 2009 Barrat produced Extreme Cave Diving, an NGT/NOVA special about the science of the Bahamas Blue Holes.

For UNESCO’s World Heritage Site series, he wrote and directed films about the Peking Man Site, The Great Wall, Beijing’s Summer Palace, and the Forbidden City.

Barrat’s lifelong interest in artificial intelligence got a boost in 2000, when he interviewed Ray Kurzweil, Rodney Brooks, and Arthur C. Clarke for a film about Stanley Kubrick’s 2001: A Space Odyssey.

For more information see http://www.jamesbarrat.com