Edsger Dijkstra and the Paradox of Complexity

Socrates / Profiles

Posted on: June 16, 2025 / Last Modified: June 16, 2025

When we talk about pioneers of computer science, names like Turing, von Neumann, and Shannon often dominate the conversation. But Edsger W. Dijkstra — though far less publicly known — was one of the true intellectual giants who laid the foundation for modern computing. His work quietly shapes much of our digital world today. And as we approach the Technological Singularity, some of Dijkstra’s warnings sound more urgent than ever.

Who Was Edsger Dijkstra?

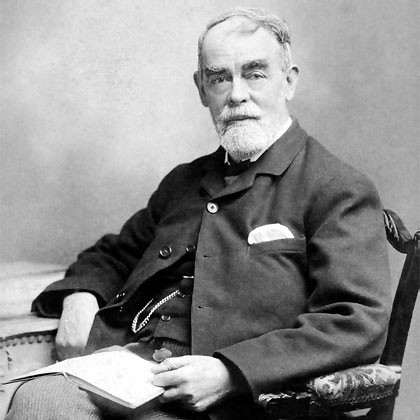

Born in Rotterdam, Netherlands, on May 11, 1930, Edsger Wybe Dijkstra originally studied mathematics and theoretical physics at the University of Leiden. But it was at the Mathematical Centre (Mathematisch Centrum) in Amsterdam that Dijkstra made history: he is generally regarded as the first person in the Netherlands to be formally employed as a computer programmer.

His academic career was nothing short of prolific. Dijkstra made groundbreaking contributions to:

• Algorithm design (inventing the shortest-path algorithm that now bears his name)

• Programming languages

• Operating systems

• Program design and verification

• Distributed processing

• Formal specification of software systems
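
The first item on that list is the easiest one to show in code. What follows is a minimal sketch of the shortest-path idea in Python, not Dijkstra's original 1959 formulation: it uses a binary heap as the priority queue, which is a common modern variant. The function name `shortest_paths` and the toy `roads` map are made up for illustration.

```python
import heapq


def shortest_paths(graph, source):
    """Return the cheapest distance from source to every reachable node.

    graph maps each node to a list of (neighbor, weight) pairs.
    Weights are assumed to be non-negative.
    """
    dist = {source: 0}
    queue = [(0, source)]  # (distance so far, node)
    while queue:
        d, node = heapq.heappop(queue)
        if d > dist.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was already found
        for neighbor, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbor, float("inf")):
                dist[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return dist


# Hypothetical toy road map: node -> [(neighbor, cost), ...]
roads = {
    "A": [("B", 4), ("C", 1)],
    "C": [("B", 2), ("D", 7)],
    "B": [("D", 1)],
}

print(shortest_paths(roads, "A"))
```

In this toy map the direct road from A to B costs 4, but the detour through C costs only 3, so the sketch settles on the detour. The approach relies on non-negative weights; with negative weights the result may be wrong.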

In 1972, Dijkstra received the prestigious Turing Award — often referred to as the Nobel Prize of Computer Science — for his fundamental contributions to programming language design.

From 1984 until his retirement in 1999, Dijkstra held the Schlumberger Centennial Chair in Computer Science at the University of Texas at Austin. He passed away in 2002 after a long battle with cancer.

Dijkstra’s Warnings: Complexity is the Enemy

Long before conversations about artificial general intelligence (AGI), exponential growth, or the Singularity filled our headlines, Dijkstra was already warning about the true challenge of computing: growing complexity.

Some of his most famous quotes remain chillingly relevant today:

“The question of whether a computer can think is no more interesting than the question of whether a submarine can swim.”

“Computer Science is no more about computers than astronomy is about telescopes.”

“Testing shows the presence, not the absence of bugs.”
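
That last quote is easy to demonstrate. The sketch below is a deliberately flawed, hypothetical `is_leap_year` function together with a small test suite that it passes. Both the function and the chosen test years are assumptions made up for illustration.

```python
def is_leap_year(year):
    # Deliberately incomplete rule (hypothetical example):
    # it ignores the exception for century years not divisible by 400.
    return year % 4 == 0


# A plausible-looking test suite, and every check passes.
for year, expected in [(2020, True), (2023, False), (2024, True), (2000, True)]:
    assert is_leap_year(year) == expected

# Yet the function is still wrong for an input nobody tested.
print(is_leap_year(1900))  # prints True, although 1900 was not a leap year
```

The passing tests only show that no bug was found for those four inputs. They say nothing about the inputs that were never tried.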

But perhaps his most prescient warning was about the accelerating scale of computational power — and the corresponding software crisis:

“When we had no computers, we had no programming problem either. When we had a few computers, we had a mild programming problem. Confronted with machines a million times as powerful, we are faced with a gigantic programming problem.”

In other words, as machines grow exponentially more powerful, the task of controlling, programming, verifying, and securing the software that runs on them grows correspondingly harder.

Dijkstra and the Singularity

Unlike many contemporary futurists, Dijkstra never used terms like Singularity or AGI. Yet, his insights point directly at the core dilemma we face as AI and machine autonomy advance:

• Raw hardware is easy; reliable, secure software is not.

• Exponential increases in machine power create exponentially more challenging control problems.

• We may build machines whose complexity exceeds human cognitive limits.

This is precisely the kind of scenario envisioned by modern thinkers, such as Nick Bostrom and Eliezer Yudkowsky, who are concerned with AI alignment and safety.

Dijkstra’s warnings foreshadow a world where our inability to fully control or even understand the systems we’ve built becomes the greatest existential threat — a key issue at the heart of Singularity debates today.

The Paradox of Complexity

And herein lies the deeper paradox Dijkstra saw long before most:

Computers were invented to make life easier, yet the more powerful they become, the more complex, fragile, and difficult to control our world grows.

Instead of simplifying reality, we are entangling ourselves in vast, intricate systems whose inner workings often defy complete comprehension — even by their creators. The tools designed to liberate us may instead entrap us in layers of complexity we can no longer master.

Dijkstra understood that technological progress doesn’t automatically deliver simplicity or safety. Quite the opposite: complexity scales faster than our ability to manage it.

Complexity Is Still the Core Problem

As we move ever closer toward AGI and increasingly autonomous systems, Dijkstra’s voice echoes as both a warning and a guide. The problem isn’t whether computers can “think.” The problem is whether humans can still manage, verify, and maintain control over systems whose complexity rapidly outpaces our own intellectual limits.

The Singularity may not arrive with a bang, but with a quiet failure to comprehend the intricacies of our own code.