Greg Bear, Ramez Naam and William Hertling on the Singularity

Socrates / Podcasts

Posted on: October 2, 2014 / Last Modified: January 19, 2025

Podcast: Play in new window | Download | Embed

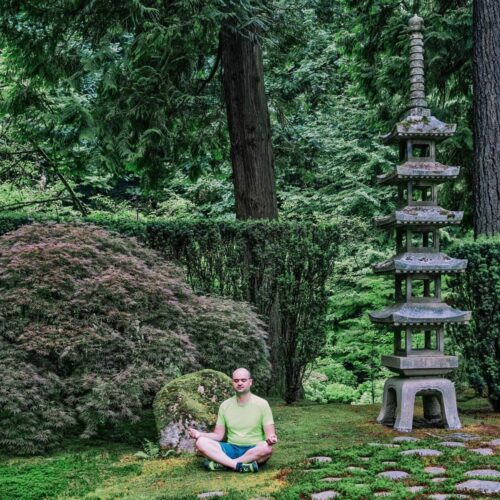

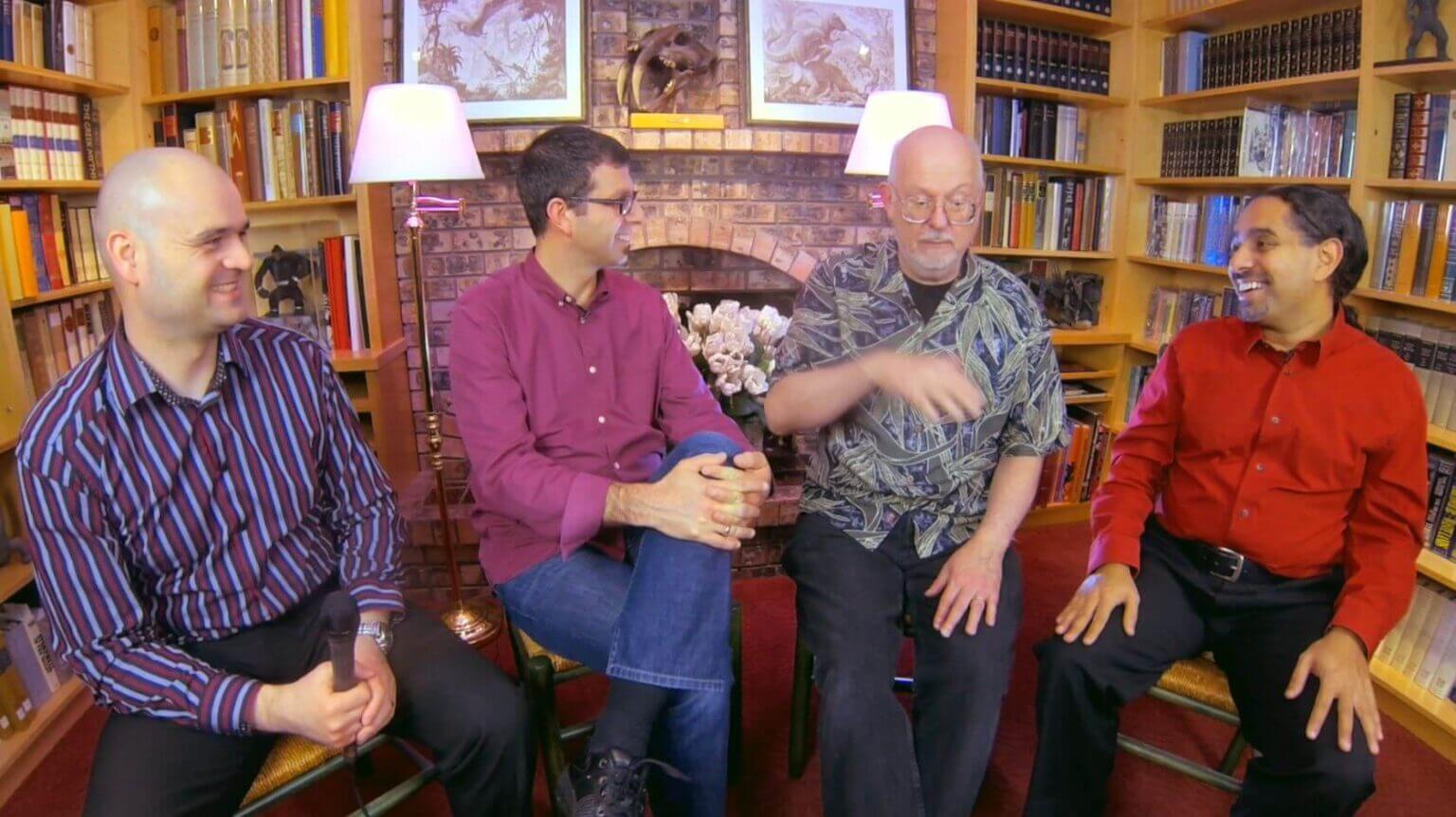

This sci-fi round-table discussion concludes my Seattle 1-on-1 interviews with Ramez Naam, William Hertling and Greg Bear. The video was recorded last November; it was produced by Richard Sundvall, shot by Ian Sun and generously hosted by Greg and Astrid Bear. (Special thanks to Agah Bahari, who did the interview audio remix and basically saved the footage.)

During our 30-minute discussion with Greg Bear, Ramez Naam and William Hertling, we cover a variety of interesting topics such as: what science fiction is; the definition of the technological singularity and whether it could or would happen; the potential for conflict between humans and AI; emerging AI and evolution; the differences between thinking and computation; whether the singularity is a religion or not…

As always, you can listen to or download the audio file above, or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation or become a patron on Patreon.