Suzanne Gildert on Kindred AI: Non-Biological Sentiences are on the Horizon

Posted on: November 5, 2016 / Last Modified: March 30, 2024

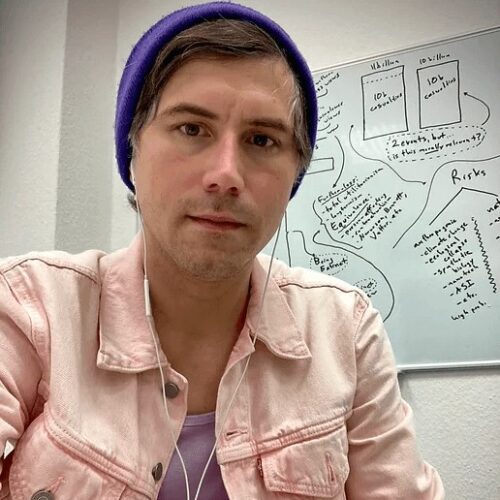

Suzanne Gildert is a co-founder and CTO of Kindred AI, a company pursuing the modest vision of “building machines with human-like intelligence.” Her startup has just come out of stealth mode, and I am both proud and humbled to say that this is the first long-form interview Suzanne has ever done. Kindred AI has raised 15 million dollars from notable investors and currently employs 35 experts in its offices in Toronto, Vancouver, and San Francisco. Even better, Suzanne is a long-time Singularity.FM podcast fan, total tech geek, Gothic artist, Ph.D. in experimental physics, and former D-Wave quantum computer maker. Right now I honestly can’t think of a more interesting person to have a conversation with.

During our 100-minute discussion, Suzanne Gildert and I cover a wide variety of interesting topics such as: why she sees herself as a scientist, engineer, maker, and artist; the interplay between science and art; the influence of Gothic art in general and the images of angels and demons in particular; her journey from experimental physics into quantum computers and embodied AI; building tools to answer questions versus intelligent machines that can ask questions; the importance of a massively transformative purpose; the convergence of robotics, the ability to move large amounts of data across networks, and advanced machine learning algorithms; her dream of a world with non-biological intelligences living among us; whether she fears AI or not; the importance of embodying intelligence and providing human-like sensory perception; whether consciousness is a classical Newtonian emergent property or a quantum phenomenon; ethics and robot rights; self-preservation and Asimov’s Laws of Robotics; giving robots goals and values; the magnifying mirror of technology and the importance of asking questions…

As always, you can listen to or download the audio file above, or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Suzanne Gildert?

Suzanne Gildert is co-founder and CTO of Kindred. She oversees the design and engineering of the company’s human-like robots and is responsible for the development of cognitive architectures that allow these robots to learn about themselves and their environments. Before founding Kindred, Suzanne worked as a physicist at D-Wave, designing and building superconducting quantum processors, and as a researcher in quantum artificial intelligence software applications.

Suzanne likes science outreach, retro tech art, coffee, cats, electronic music, and extreme lifelogging. She has published a book of art and poetry. She is passionate about robots and their role as a new form of symbiotic life in our society.

Suzanne received her Ph.D. in experimental physics from the University of Birmingham (UK) in 2008, specializing in quantum device physics, microfabrication techniques, and low-temperature measurements of novel superconducting circuits.