Luke Muehlhauser: Superhuman AI is Coming This Century

Socrates / Podcasts

Posted on: January 16, 2012 / Last Modified: November 22, 2021

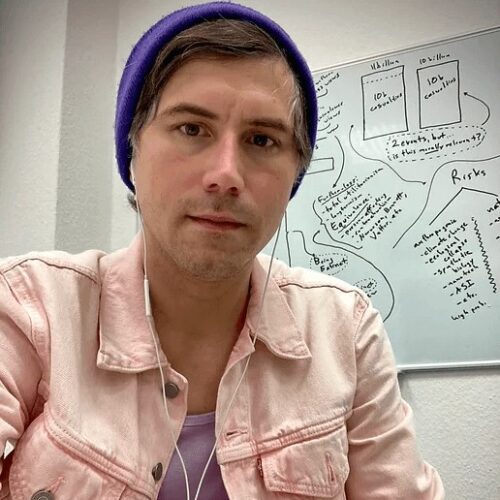

Last week I interviewed Luke Muehlhauser for Singularity 1 on 1.

Luke Muehlhauser is the Executive Director of the Singularity Institute, the author of many articles on AI safety and the cognitive science of rationality, and the host of the popular podcast “Conversations from the Pale Blue Dot.” His work is collected at lukeprog.com.

I have to say that despite his young age and lack of a university degree (a criticism we discuss during our interview), Luke was one of the best and most clearly spoken guests on my show, and I really enjoyed talking to him. During our 56-minute conversation we discuss a wide variety of topics, such as: Luke's evangelical Christian background as the first-born son of a pastor in northern Minnesota; his fascinating transition from religion and theology to atheism and science; his personal motivation to overcome our very human cognitive biases and to help address existential risks to humanity; the Singularity Institute, its mission, members and fields of interest; the "religion for geeks" (or "rapture of the nerds") charge and other popular criticisms and misconceptions; and our chances of surviving the technological singularity.

My favorite quote from the interview:

Superhuman AI is coming this century. By default, it will be disastrous for humanity. If you want to make AI a really good thing for humanity please donate to organizations already working on that or – if you are a researcher – help us solve particular problems in mathematics, decision theory, or cognitive science.

As always, you can listen to or download the audio file above, or scroll down and watch the full video interview. To show your support, you can write a review on iTunes, make a direct donation or become a patron on Patreon.

Related articles

- The Complete 2011 Singularity Summit Video Collection

- Spencer Greenberg on Singularity 1 on 1: To Become Better Thinkers – Study Our Cognitive Biases and Logical Fallacies

- Facing the Singularity

- 80,000 Hours

- Video Q&A about the Singularity Institute

- Robert J. Sawyer on Singularity 1 on 1: The Human Adventure is Just Beginning

- So You Want to Save the World