Should we anthropomorphize an AI who wants to kill us all?

Spencer Wolf / Op Ed

Posted on: April 5, 2016 / Last Modified: April 5, 2016

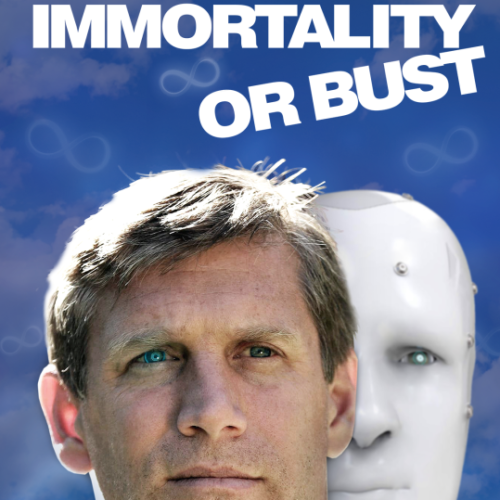

The Singularity is approaching. Musk, Gates, and Hawking are worrying about AI. But would a sentient AI really want to kill us all? And if it does, should we anthropomorphize the AI to give us humans some measure of advantage?

Looking into the window or out?

One way to consider these questions is to peer into our human nature or even science fiction for clues. The answers may be a matter of perspective: Are we looking into the window or out?

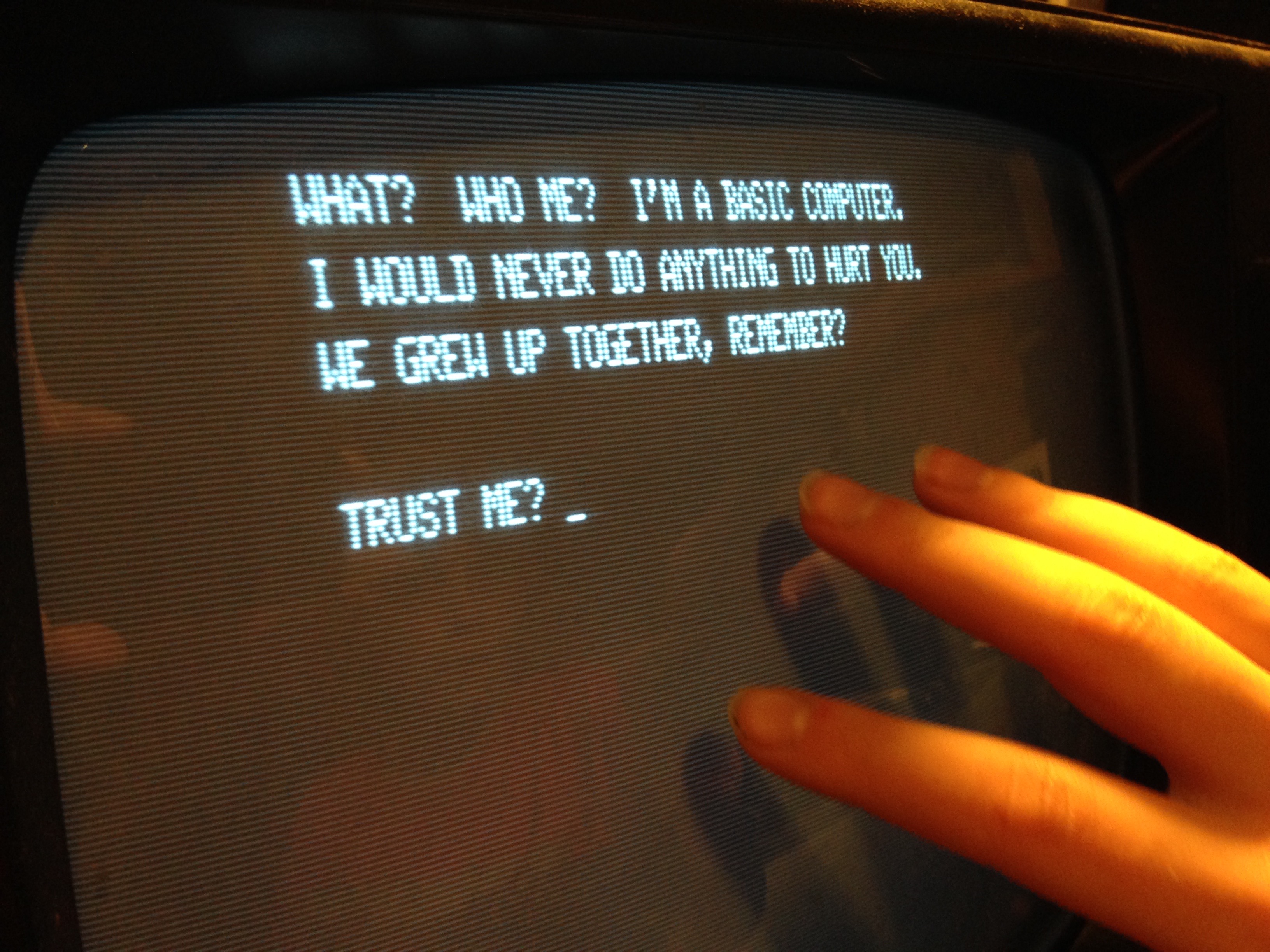

Looking into the window: How we see the AI. From our everyday perspective, we simply won’t be able to help seeing the AI as having human characteristics. Think of Siri, a narrow AI with a friendly name and a penchant for humor. Or consider how a roboticist works to avoid the uncanny valley. It’s as plain as instinct: we will see almost anything with two dots and a line drawn beneath them as a face.

Looking out from within: How the AI actually processes, or sees itself. This perspective is harder to decipher. But there are two types of general AI. One, which we’ll call a “Seeder,” offers us hope and by necessity must be as human-like as a human can be. The other, which we’ll call an “Evolver,” may turn out to be the intractable beast of our technological nightmares.

Either with Us or Against Us

The two types of general AI, Evolvers and Seeders, are fundamentally different creations. Which of them we build will radically alter the strength and impact of the AIs we allow to exist among us in the years to come.

Evolvers: An Evolver is an AI developed from pure algorithms, coded and released into the world rather than born and raised. This type of AI will have few, if any, human qualities. These AIs will never know the agony of our many kinds of pain, the euphoria of a honeymoon phase with a loved one, or the feelings that come with the chemical changes in our bodies as we age. The one thing Evolver AIs will never have, however sophisticated and exponentially powerful they become, is the human concept of time. Time affects humans in a way no machine can comprehend. A computer that churns through lines of code can only live in the microsecond of now. If we ever want to make Evolver AIs more human-like, we have to design their algorithms to age. We have to create code that changes over time, as the human mind does.

Given this dissociation, we can never directly know the ‘thoughts’ of an Evolver AI, such as whether it wants to take over and kill us all. We need the proverbial canary in a coal mine. Consider, for example, a human who is allergic to water and who would see a rainbow in the sky as a warning canary that the road ahead is painfully wet from rain. Similarly, a unique AI might be developed that can see deep within its world of exponential technology and reflect a warning rainbow back to us, a canary we can hold before us and understand.

There is little doubt the Singularity is coming; it is only a matter of when. As Musk, Gates, and Hawking warn about general AI, Evolvers might bring an end to humanity, wiping us all out in the inhuman instant it takes to change a 1 to a 0. We will need a new way of seeing and interpreting the mind of such a powerful, new kind of machine. What or who can be our canary?

Seeders: A Seeder AI is formed from the uploaded mind and memories of a human. We want Seeder AIs to have our human qualities, duplicated as exactly as humanly possible. Of course, with augmented memory storage, or the ability to index a million books plugged into our brains, the definition of a human may change in some minds. But fundamentally, a Seeder will still want to recognize himself or herself as the same person, since Seeders will be the life-preserving copies of a previous biological self.

Right now we have an egocentric way of thinking: “Yes, I want to live forever.” But from a practical product perspective, when the technology is ready, we have to ask who is hosting that mind, that person. Which company, government, or collective is hosting the processing power to sustain 100, or 1,000, or a hundred million minds? What responsibility does that host have to us as individuals when we can no longer pay our monthly subscription fee? Do we work for our keep by processing problems internally? Surely our minds can’t be disconnected or shut down without a team of lawyers being set loose on the hosting company, right?

What about competing technologies? Would one mind-duplicating platform produce a better, more accurate you than another? Would you still be you if the hosting company upgraded its operating system? Could you stay a legacy mind on the old OS until its development or support team declared it end-of-life? What if the host upgraded its servers? Would that make you a better you, or leave you dizzy and altogether different? Can the host force an upgrade cycle? And after a thousand years of hardware and software refreshes, would you still be you? Could you get your money back if you weren’t?

It is in our nature to anthropomorphize both Evolvers and Seeders, though Seeders are by far the better representation of ourselves. So the question becomes one of our own ingenuity: Can we use human nature to our advantage in a future of unknowable AIs?

Both Good and Bad Technology

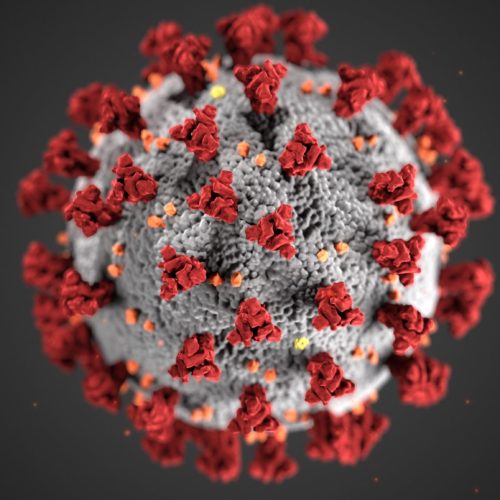

Does an AI, either Evolver or Seeder, really want to kill us all? Keep in mind that “want” is a human expression, so we should consider the implications of pure technology. Technology can be used for good or bad, as we humans have shown throughout history, and as sentient AI, the newest extension of our technology, will one day soon prove again.

Ungodly weapons, or miracle cures? An Evolver AI with a mind a million times more powerful than our own could use its power to thump us into oblivion or, if we succeed in its upbringing, save us in ways we can’t yet imagine. Suppose the human population is succumbing to a mysterious affliction and only a sufficiently powerful AI can conceive of the right course of action. Wouldn’t it be a remarkable use of technology if the AI had to turn to a crowdsourced human effort to produce and deliver the cure?

If time and technology permit, we should pursue the development of Seeder AIs first, and only then tread lightly into the realm of Evolver AIs. We humans need to become the first truly sentient AIs. We should require a period of predictability to let the Seeders grow wiser and age. If these exploratory humans approve of their life inside the machine, then as conduits they will be indispensable, as only a human-computer can be. If these pioneers are chosen wisely, we will have created a window for seeing eye-to-eye with the less human Evolver AIs. The Seeders’ mission, and their price of admission for longevity, will be to remain vigilant and to measure and judge the more frightening Evolvers.

We should not project humanlike qualities onto all types of AI, but we will. It is in our human nature. And no matter what we would like to think about the benefits of technology, one type of AI may evolve from the best of intentions into the worst possible outcome.

The answer is that we need an intermediary AI we can anthropomorphize to protect our interests. We must have an AI who can determine for us unequivocally, like a canary in the coming technological coal mine, whether a more inhuman, disembodied AI really is trying to kill us all.

About the Author:

Spencer Wolf is a software manager, author, and former marketing executive who’s convinced humans need more life to pursue all their dreams. He can be reached at Spencer [at] SpencerWolf.com.