Hugo de Garis on AI: Are We Building Gods or Terminators?

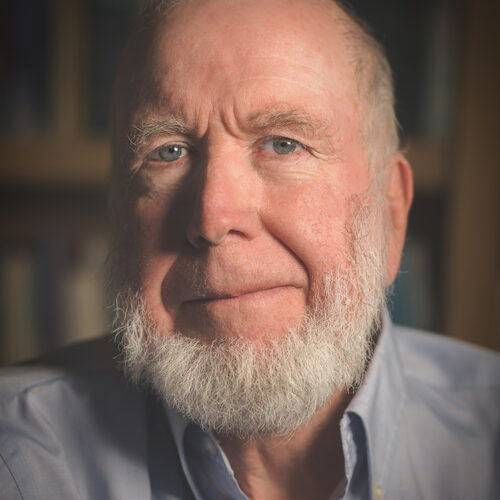

Hugo de Garis is the past director of the Artificial Brain Lab (ABL) at Xiamen University in China. Best known for his doomsday book The Artilect War, Dr. de Garis has always been on my wish-list of future guests on Singularity 1 on 1. Finally, a few weeks ago I managed to catch him for a 90-minute interview via Skype.

During our discussion with Dr. de Garis, we cover a wide variety of topics such as: how and why he got interested in artificial intelligence; Moore’s Law and the laws of physics; the hardware and software requirements for artificial intelligence; why cutting-edge experts often miss the writing on the wall; emergent intelligence and other approaches to AI; Dr. Henry Markram’s Blue Brain Project; the stakes in building AI and his concepts of Artilects, Cosmists and Terrans; cosmology, the Fermi Paradox, and the Drake equation; the advance of robotics and the political, ethical, legal and existential implications thereof; species dominance as the major issue of the 21st century; the technological singularity and our chances of surviving it in the context of fast and slow take-off.

As always you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Hugo de Garis?

Prof. Hugo de Garis is 64 and has lived in 7 countries (Australia, England, Holland, Belgium, Japan, US, China). He got a Ph.D. in Artificial Life and Artificial Intelligence from Brussels University in 1991. He was formerly director of the Artificial Brain Lab (ABL) at Xiamen University in China, where he was building China’s first artificial brain, by evolving large numbers of neural net modules using supercomputers. He guest-edited, with Ben Goertzel, the planet’s first special issue of an academic journal on Artificial Brains, and is currently writing a book Artificial Brains: An Evolved Neural Net Module Approach for World Scientific.

He is probably best known for his concept of the Artilect War, in which he predicts that a sizable proportion of humanity will not accept being cyborged and will not permit the risk of human extinction at the hands of advanced cyborgs and artilects. He labels such people Terrans, and he claims they will go to war against the Cosmists (i.e. people in favor of building artilects) and the Cyborgists (who want to become artilect gods themselves). This artilect war will take place in the second half of the 21st century with 21st-century, probably nanotech-based, weapons and may kill billions of people – Gigadeath.

Hugo de Garis is the author of two books:

and

Since his retirement in 2010, Hugo has been “ARCing” (after retirement careering), taking up a new (actually old) career of intensive study of Ph.D.-level Pure Math and Math Physics. He makes home videos of his lectures on these topics and puts them on YouTube to give the world a comprehensive education in graduate-level pure math, math physics, and computer theory. He is doing at the high end what Khan of Khan Academy is doing at the low end, i.e. teaching people for free.

Dr. de Garis is also very interested in Globism – the ideology in favor of the creation of a global state. He sees the annual doubling speed of the internet as having a huge impact on the growth of a global language, global cultural homogenization, and the formation of economic and political blocs, pushing for the creation of a fully democratic global state (world government), “Globa”.

Prof. de Garis is the technical consultant for a major Hollywood movie on the theme of species dominance coming out in 2013, along with Spielberg’s upcoming Robopocalypse. Thus he believes that by 2014 the issue of Species Dominance should be mainstream.

His website is http://profhugodegaris.wordpress.com