Michael Shermer: Be Skeptical! (Even of Skeptics)

Posted on: January 18, 2012 / Last Modified: November 21, 2021

A couple of days ago I interviewed Michael Shermer for Singularity 1 on 1.

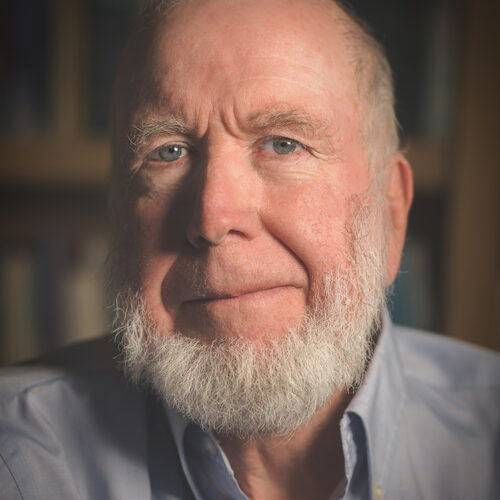

I met Dr. Shermer at the recent Singularity Summit in New York where he was one of the most entertaining, engaging, and optimistic speakers. Since he calls himself a skeptic and not a singularitarian, I thought he would bring not only balance to my singularity podcast but also a healthy dose of skepticism, and I was not disappointed.

During our conversation we discuss a variety of topics, such as his education at a Christian college and his original interest in religion and theology; his eventual transition to atheism, skepticism, science, and the scientific method; SETI, the singularity, and religion; scientific progress and the dots on the curve as precursors of big breakthroughs; life extension, cloning, and mind uploading; being a skeptic and an optimist at the same time; the “social singularity”; global warming; and the tricky balance between being a skeptic and still being able to learn and make progress.

As always, you can listen to or download the audio file above, or scroll down and watch the full video interview. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Michael Shermer’s Singularity Summit presentation: “Social Singularity: Transitioning from Civilization 1.0 to 2.0”

Who is Michael Shermer?

Dr. Michael Shermer is the Founding Publisher of Skeptic magazine (www.skeptic.com), the Executive Director of the Skeptics Society, a monthly columnist for Scientific American, the host of the Skeptics Distinguished Science Lecture Series at Caltech, and an Adjunct Professor at Claremont Graduate University and Chapman University.

Dr. Shermer’s latest book is The Mind of the Market, on evolutionary economics. His previous book was Why Darwin Matters: The Case Against Intelligent Design, and he is the author of Science Friction: Where the Known Meets the Unknown, about how the mind works and how thinking goes wrong. His book The Science of Good and Evil: Why People Cheat, Gossip, Care, Share, and Follow the Golden Rule is on the evolutionary origins of morality and how to be good without God. He wrote a biography, In Darwin’s Shadow, about the life and science of the co-discoverer of natural selection, Alfred Russel Wallace. He also wrote The Borderlands of Science, about the fuzzy land between science and pseudoscience, and Denying History, on Holocaust denial and other forms of pseudohistory. His book How We Believe presents his theory on the origins of religion and why people believe in God. He is also the author of Why People Believe Weird Things, on pseudoscience, superstitions, and other confusions of our time.

According to the late Stephen Jay Gould (from his Foreword to Why People Believe Weird Things): “Michael Shermer, as head of one of America’s leading skeptic organizations, and as a powerful activist and essayist in the service of this operational form of reason, is an important figure in American public life.”

Dr. Shermer received his B.A. in psychology from Pepperdine University, his M.A. in experimental psychology from California State University, Fullerton, and his Ph.D. in the history of science from Claremont Graduate University (1991). He was a college professor for 20 years (1979-1998), teaching psychology, evolution, and the history of science at Occidental College (1989-1998), California State University Los Angeles, and Glendale College.

Since creating the Skeptics Society, Skeptic magazine, and the Skeptics Distinguished Science Lecture Series at Caltech, he has appeared as a skeptic of weird and extraordinary claims on such shows as The Colbert Report, 20/20, Dateline, Charlie Rose, Larry King Live, Tom Snyder, Donahue, Oprah, Leeza, Unsolved Mysteries (but, proudly, never Jerry Springer!), and others, and he has been interviewed in countless documentaries aired on PBS, A&E, Discovery, The History Channel, The Science Channel, and The Learning Channel. Shermer was the co-host and co-producer of the 13-hour Family Channel television series, Exploring the Unknown.
