Dawn of the Kill-Bots: the Conflicts in Iraq and Afghanistan and the Arming of AI (part 4)

Socrates / Op Ed

Posted on: December 18, 2009 / Last Modified: December 18, 2009

Part 4: Military Turing Test — Can robots commit war-crimes?

We have identified the trend of moving military robots from their current, largely secondary and supportive role to the forefront of military action, where they become primary direct participants or (as Foster-Miller proudly calls its MAARS bots) “war fighters.” We also have to recognize the profound implications that such a process will have, not only for the future of warfare but potentially for the future of mankind. To do so, we will briefly consider three questions that for now are treated as broadly philosophical but that, as robot technology advances and becomes more prevalent, will eventually become highly political, legal and ethical issues:

Can robots be intelligent?

Can robots have a conscience?

Can robots commit war crimes?

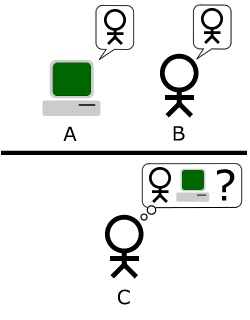

In 1950 Alan Turing introduced what he believed was a practical test for computer intelligence, now commonly known as the Turing test. It is an interactive test involving three participants: a computer, a human interrogator and a human subject. It is a blind test in which the interrogator asks questions via keyboard and receives answers via a display screen, and on the basis of those answers has to determine which come from the computer and which from the human subject. According to Turing, if a statistically sufficient number of different people play the roles of interrogator and human subject, and if a sufficient proportion of the interrogators are unable to distinguish the computer from the human being, then the computer is considered an intelligent, thinking entity, or AI.

If we agree that AI is very likely to have substantial military applications, then there is clearly a need to develop an even more sophisticated and very practical military Turing test, one which ought to ensure that autonomous armed robots abide by the rules of engagement and the laws of war. On the other hand, while the question of whether a robot or a computer is (or will be) a self-conscious thinking entity is an important one, answering it is not necessarily a requirement for considering the issue of war crimes. Given that those MAARS-type bots have literally killer applications, the potential for a unit of them going “nuts on a shooting spree” or getting “hacked” ought to be considered carefully and, hopefully, well before any such incident occurs.

Robots capable of shooting on their own are also a hotly debated legal issue. According to Gordon Johnson, who leads robotics efforts at the Joint Forces Command research center in Suffolk, Virginia, “The lawyers tell me there are no prohibitions against robots making life-or-death decisions.” When asked about the potential for war crimes, Johnson replied, “I have been asked what happens if the robot destroys a school bus rather than a tank parked nearby. We will not entrust a robot with that decision until we are confident they can make it.” (Thus the question really is not “if” robots will be entrusted with such decisions but “when.” Needless to say, government confidence in a project has historically been no guarantee that things will not go wrong.)

On the other hand, in complete opposition to Johnson’s claims, barrister and engineer Chris Elliott holds that it is currently illegal for any state to deploy a fully autonomous system. Elliott claims that “Weapons intrinsically incapable of distinguishing between civilian and military targets are illegal” and that war robots could be legally used only once they can successfully pass a “military Turing test.” At that point any autonomous system ought to be no worse than a human at making military decisions about legitimate targets in any potential engagement. Thus, in contrast to the original Turing test, this test would be based on decisions about legitimate targets. Elliott believes that “Unless we reach that point, we are unable to [legally] deploy autonomous systems. Legality is a major barrier.”

The gravity of both the practical and legal issues surrounding the kill-bots is not overlooked by the people directly engaged in their production, adoption and deployment in the field. In 2005, during his keynote address at the fifth annual RoboBusiness Conference in Pittsburgh, Kevin Fahey, program executive director of ground combat systems for the U.S. Army, said: “Armed robots haven’t yet been deployed because of the extensive testing involved […] When weapons are added to the equation, the robots must be fail-safe because if there’s an accident, they’ll be immediately relegated to the drawing board and may not see deployment again for another 10 years. […] You’ve got to do it right.” (Note again that any such delays put into question “when,” not “if,” such armed robots will be used on a large scale.)

While the risks of using armed robots may be apparent to anyone who has seen science fiction classics such as the Terminator and Matrix series, we should not underestimate the variety of political, military, economic and even ethical reasons supporting their use. Some robotics researchers believe that robots could make the perfect warrior.

For example, according to Ronald Arkin, a robot researcher at Georgia Tech, robots could make even more ethical soldiers than humans. Dr. Arkin is working with the Department of Defense to program ethics, including the rules of the Geneva Conventions, into the next generation of battle robots. He says that robots will act more ethically than humans because they have no desire for self-preservation, no emotions, and no fear of disobeying their commanders’ orders when those orders are illegitimate or in conflict with the laws of war. In addition, Dr. Arkin supports the somewhat ironic claim that robots will act more humanely than people because stress and battle fatigue do not affect their judgment in the way they affect a soldier’s. It is for those reasons that Dr. Arkin is developing a set of rules of engagement for battlefield robots to ensure that their use of lethal force follows the rules of ethics and the laws of war. In other words, he is indeed working on the creation of an artificial conscience that could one day pass a military Turing test. Other advantages supportive of Dr. Arkin’s view are expressed in Gordon Johnson’s observation that:

“A robot can shoot second. […] Unlike its human counterparts, the armed robot does not require food, clothing, training, motivation or a pension. […] They don’t get hungry […] (t)hey’re not afraid. They don’t forget their orders. They don’t care if the guy next to them has just been shot. Will they do a better job than humans? Yes.”

In addition to the economic and military reasons behind the adoption of robot soldiers there is also the issue often referred to as the “politics of body bags.”

Using robots will make waging war less vulnerable to domestic politics and hence will make it easier for both political and military leaders to gather support at home for any conflict that is fought on foreign soil. As a prescient article in The Economist noted, “Nobody mourns a robot.” On the other hand, the concern raised by that possibility is that it may make war-fighting more likely, given that mounting casualties will put less pressure on the leaders. At any rate, the issues surrounding the use of kill-bots have already passed beyond being merely theoretical or legal ones. Their practical importance comes to light with some of the rare but worryingly conflicting reports about the deployment of the SWORDS robots in Iraq and Afghanistan.

The first worrying report came from the blog column of one of the writers for Popular Mechanics. In it, Erik Sofge noted that in 2007 three armed ground bots were deployed to Iraq. All three units were the SWORDS model produced by Foster-Miller Inc. Interestingly enough, all three units were almost immediately pulled out. According to Sofge, when Kevin Fahey (the Army’s Program Executive Officer for Ground Forces) was asked about the SWORDS pull-out, he tried to give a vague answer while stressing that the robots never opened any unauthorized fire and that no humans were hurt. Pressed to elaborate, Fahey said that it was his understanding that “the gun [of one of the robots] started moving when it was not intended to move.” That is to say, the weapon of the SWORDS robot swung around randomly or in the wrong direction. Sofge pointed out that, at that point in time, no specific reason for the withdrawal had been given by either the military or Foster-Miller Inc.

A similar though even stronger report came from Jason Mick, who was also present at the RoboBusiness Conference in Pittsburgh in 2008. In his blog on the DailyTech website, Mick claimed that “First generation war-bots deployed in Iraq recalled after a wave of disobedience against their human operators.”

It was just a few days later that Foster-Miller published the following statement:

“Contrary to what you may have read on other web sites, three SWORDS robots are still deployed in Iraq and have been there for more than a year of uninterrupted service. There have been no instances of uncommanded or unexpected movements by SWORDS robots during this period, whether in-theater or elsewhere.

[…] TALON Robot Operations has never “refused to comment” when asked about SWORDS. For the safety of our war fighters and due to the dictates of operational security, sometimes our only comment is, “We are unable to comment on operational details.”

Several days after that, Jason Mick also published a correction, though it seems at least some of the details are still unclear and there is a persistent disharmony between the statements coming from Foster-Miller and those coming from the DoD. For example, Foster-Miller spokeswoman Cynthia Black claimed that “The whole thing is an urban legend.” Yet Kevin Fahey was reported as saying the robots recently did something “very bad.”

Two other similarly troubling cases pertaining to the Predator and Reaper UAVs were recently reported in the media.

The first one, “Air Force Shoots Down Runaway Drone over Afghanistan,” reported that:

“A drone pilot’s nightmare came true when operators lost control of an armed MQ-9 Reaper flying a combat mission over Afghanistan on Sunday. That led a manned U.S. aircraft to shoot down the unresponsive drone before it flew beyond the edge of Afghanistan airspace.”

The report goes on to point out that this is not the only incident of its kind and that there is certainly the potential for some of those drones to go “rogue.” Judging by the reported incidents, it is clear that this is not only a potential but an actual problem.

While we are on the topic of unmanned drones going “rogue,” a very recent news report disclosed that Iraq Insurgents “Hacked in Video Feeds from US Drones.”

Thus going rogue is not only an accident-related issue but also a network security one. Allegedly, the insurgents used a $26 Russian software program to tap into the video streamed live by the drones. What is even more amazing is that the video feed was not even encrypted. (Can we really blame the US Air Force for not encrypting their killer-drone video feeds?! I mean, there are still a whole lot of home laptop users without a password on their home WiFi connection! 😉 So it is only natural that the US Air Force would be no different, right?)

And what about the actual flight and weapons control signal? How “secure” is it? Can we really be certain that some terrorist cannot “hack” into the control systems and throw our stones onto our own heads? (Hm, it isn’t stones we are talking about; it is Hellfire missiles that the drones are armed with…)

As we can see, there are numerous legal, political, ethical and practical issues surrounding the development, deployment, use, command and control, and safety of kill-bots. Obviously it may take some time before the dust around the above cases settles and the accuracy of the reports is confirmed or denied beyond any doubt. Yet Jason Mick’s point in his original report still stands, whatever the particulars of each case. Said Mick:

“Surely in the meantime these developments will trigger plenty of heated debate about whether it is wise to deploy increasingly sophisticated robots onto future battlefields, especially autonomous ones. The key question, despite all the testing and development effort possible, is it truly possible to entirely rule out the chance of the robot turning on its human controllers?”

End of Part 4 (see Part 1; Part 2; Part 3 and Part 5)