Dawn of the Kill-Bots: the Conflicts in Iraq and Afghanistan and the Arming of AI (part 5)

Part 5: The Future of (Military) AI — Singularity

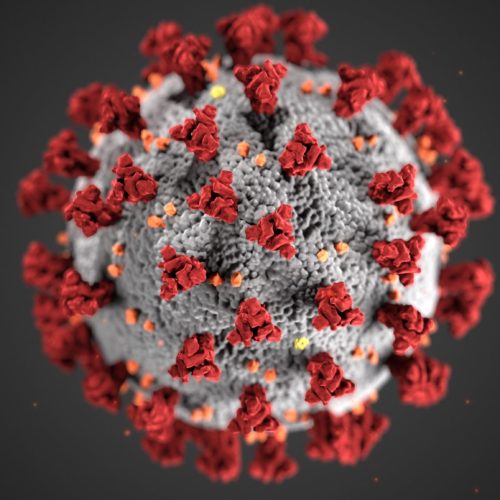

Arming machines is certainly dangerous for humans, especially for those specifically targeted by the kill-bots, but on its own it is not a process that can threaten the reign of homo sapiens in general. What can threaten it is the fact that this arming is occurring within the larger confluent revolutions in Genetics, Nanotechnology and Robotics (GNR). Combined with exponential growth trends such as Moore’s law, these arguably create the right conditions for what is referred to as the Technological Singularity.

In 1945 Alan Turing famously predicted that computers would one day play better chess than people. Fifty years later, a computer called Deep Blue defeated the reigning world champion Garry Kasparov. Today, whether it is a mouse whose movements are directed from a laptop via a Bluetooth brain implant, a monkey moving a joystick with its thoughts, humans communicating their thoughts via computers, or robots with rat-brain cells for a CPU, we have already accomplished technological feats which mere years ago were considered pure science fiction.

Isn’t it plausible, then, to consider that one day, not too many decades from now, machines may not only reach human levels of intelligence but even surpass them?

(Facing the pessimists, Arthur C. Clarke once famously said that “If a … scientist says that something is possible he is almost certainly right, but if he says that it is impossible he is very probably wrong.”)

Isn’t that the potential, if not actual, direction in which the multiple confluent and accelerating technological developments are leading us?

It is this moment, the birth of AI (machine sapiens), that is often referred to as the Technological Singularity.

So, let us look at the concept of the Singularity. For some it is an overblown myth or, at best, science fiction. For others, it is the next step in evolution and the greatest scientific watershed. According to Ray Kurzweil’s definition, “It’s a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed. Although neither utopian nor dystopian, this epoch will transform the concepts that we rely on to give meaning to our lives, from our business models to the cycle of human life, including death itself.”

According to Kurzweil’s argument, the Singularity is nothing short of the next step in evolution (a position often referred to as Transhumanism). For many millions of years biology has indeed been (our) destiny. But if we consider our species to be a cosmological phenomenon whose unique feature is its intelligence and not its structural make-up, then our biological past is highly unlikely to depict the nature of our future. So Kurzweil and other transhumanists see biology as nothing more than our past and technology as our future. To illuminate the radical implications of such a claim, it is worth quoting two whole paragraphs from Ray Kurzweil:

“The Singularity will represent the culmination of the merger of our biological thinking and existence with our technology, resulting in a world that is still human but transcends our biological roots. […] if you wonder what will remain unequivocally human in such a world, it’s simply this quality: ours is the species that inherently seeks to extend its physical and mental reach beyond current limitations.”

“Some observers refer to this merger as creating a new “species.” But the whole idea of a species is a biological concept, and what we are doing is transcending biology. The transformation underlying the Singularity is not just another in a long line of steps in biological evolution. We are upending biological evolution altogether.”

So how does the Singularity relate to the process of arming AI?

Well, most singularitarians believe that the technological Singularity is a probable and even highly likely event, but most of them certainly do not believe that it is inevitable. Thus there are several developments that could either delay or altogether prevent the event itself, or deny homo sapiens any of its potential benefits. Global war is, of course, at the top of the list, and it can lead to either outcome. In the first instance, a sufficiently large-scale non-conventional war could destroy much or all of the human capacity for further technological progress. In that case, the Singularity will be at least delayed or, if homo sapiens goes extinct, will become altogether impossible. In the second instance, if at or around the point of the Singularity there is a conflict between homo sapiens and AI (machine sapiens), then, given our complete dependence on the machines, there may be no merging between the two races (humans and machines) and humanity may forever remain trapped in biology. In turn, this may mean either our extinction or our becoming nothing more than an inferior race, subservient to the ever-growing machine intelligence.

It is for reasons like these that some scientists believe that Ray Kurzweil is dangerously naive about the Singularity, and especially about the benevolence of AI towards the human race. They argue that the post-Singularity artilects (artificial intellects) will take us not to immortality but to war at the least, if not to complete oblivion. In a way this is a debate about the potential for either techno-salvation, as foreseen by Ray Kurzweil, or techno-holocaust, as predicted by his critics. Whatever the case, the more and the better the machines of the future are trained and armed, the more possible it becomes that one day they may have the capability, if not (yet) the intent, to destroy the whole of the human race.

The potential for conflict is arguably likely to increase as the Singularity approaches, and it need not be a war between man and machine; it could also be a war among humans. Looking at current global geopolitical realities, one may argue that a global non-conventional war is unlikely, if not completely impossible. Yet over the next several decades the potential for such a war may indeed grow with the pace of technology.

First of all, it is very likely that there will be a large and accelerating proliferation of advanced weapons and military, technological and scientific capabilities all throughout the twenty-first century. Thus many more state and non-state actors will be capable of waging or, at least, starting war.

Secondly, as the Singularity approaches the breakpoint and becomes a visible possibility, there are likely to be fundamental rifts within humanity as to whether we ought to continue or stop such developments. Thus many people may push for a global neo-luddite rebellion against the machines and all those who support the Singularity. This may lead to a realignment of the whole global geopolitical reality, with both overt and covert centers of resistance. For example, one possibility may be an alliance between radical Muslim, Christian and Judaic fundamentalists. (It may currently seem impossible, but it is people such as the former chief counter-terrorism adviser Richard A. Clarke who raise such possibilities.)

It was in the rather low-tech mid-19th century that Samuel Butler wrote his Darwin among the Machines and argued that machines would eventually replace man as the next step in evolution. Butler concluded:

“Our opinion is that war to the death should be instantly proclaimed against them. Every machine of every sort should be destroyed by the well-wisher of his species. Let there be no exceptions made, no quarter shown; let us at once go back to the primeval condition of the race. If it be urged that this is impossible under the present condition of human affairs, this at once proves that the mischief is already done, that our servitude has commenced in good earnest, that we have raised a race of beings whom it is beyond our power to destroy, and that we are not only enslaved but are absolutely acquiescent in our bondage.”

A well-known modern neo-luddite is Ted Kaczynski, aka the Unabomber. Kaczynski not only called for resistance to the rise of the machines via his manifesto (see Industrial Society and Its Future) but even started a terrorist bombing campaign to support and popularize his cause. While Samuel Butler’s argument was largely unknown to or ignored by the majority of his contemporaries, and the Unabomber was called a terrorist psycho, history may take a second look at them both. It is not impossible that, as the Singularity becomes more visible, then at least for the neo-luddites, if not for the whole of humanity, Butler may come to be seen as a visionary and Kaczynski as a hero who stood up against the rise of the machines. Thus, if humanity becomes divided into transhumanists and neo-luddites, or if the machines rebel against humanity, conflict may be impossible to avoid.

It may be ironic that Karel Čapek, who first used the term robot, ended his play R.U.R. with the demise of humanity and robots taking over the world. The good news, however, is that this possibility is brought about by our own ingenuity and at our own pace. Hence the technology which we create does not have to be nihilistic. Like the Terminator, it may be our exterminator or our savior, our end or a new beginning…

This blog does not try to address the issue of arming AI exhaustively, or to provide solutions or policy recommendations. What it attempts to do is to put forward an argument about the issues, the context and the stakes within which this process takes place. Thus, it has been successful if, after reading it, one is at least willing to consider the possibility that the crude and lightly armed robots currently tested in the conflicts in Iraq and Afghanistan are not simply one of the latest tools in the large US military inventory, for what they are today is not what they may turn out to be tomorrow.

Today we are witnessing the dawn of the kill-bots. How high, and under what conditions, the robot star will rise tomorrow is up to us to consider…

The End (see Part 1; Part 2; Part 3; Part 4)
