Neuromorphic Chips: a Path Towards Human-level AI

Dan Elton / Op Ed

Posted on: September 2, 2016 / Last Modified: December 7, 2018

Recently we have seen a slew of popular films that deal with artificial intelligence, most notably The Imitation Game, Chappie, Ex Machina, and Her. However, despite over five decades of research into artificial intelligence, there remain many tasks which are simple for humans that computers cannot do. Given the slow progress of AI, for many the prospect of computers with human-level intelligence seems further away today than it did when Isaac Asimov’s classic I, Robot was published in 1950. The fact is, however, that today the development of neuromorphic chips offers a plausible path to realizing human-level artificial intelligence within the next few decades.

Starting in the early 2000s, there was a realization that neural network models, which are based on how the human brain works, could solve many tasks that could not be solved by other methods. The buzzphrase ‘deep learning’ has become a catch-all term for neural network models and related techniques, as shown by plotting the frequency of the phrase using Google Trends:

[Google Trends chart comparing search interest over time for the terms “neural network”, “deep learning”, and “machine learning”.]

Most deep learning practitioners acknowledge that the recent popularity of ‘deep learning’ is driven by hardware, in particular GPUs. The core algorithms of neural networks, such as the backpropagation algorithm for calculating gradients, were developed in the 1970s and 80s, and convolutional neural networks were developed in the late 90s.

Neuromorphic chips are the logical next step from the use of GPUs. While GPU architectures are designed for computer graphics, neuromorphic chips can implement neural networks directly in hardware. Neuromorphic chips are currently being developed by a variety of public and private entities, including DARPA, the EU, IBM and Qualcomm.

The representation problem

A key difficulty solved by neural networks is the problem of programming conceptual categories into a computer, also called the “representation problem”. Programming a conceptual category requires constructing a representation in the computer’s memory to which phenomena in the world can be mapped. For example, “Clifford” would be mapped to the category of “dog” and also to “animal” and “pet”, while a VW Beetle would be mapped to “car”. Constructing a robust mapping is very difficult since the members of a category can vary greatly in their appearance: for instance, a “human” may be male or female, old or young, and tall or short. Even a simple object, like a cube, will appear different depending on the angle it is viewed from and how it is lit. Since such conceptual categories are constructs of the human mind, it makes sense that we should look at how the brain itself stores representations. Neural networks store representations in the connections between neurons (called synapses), each of which contains a value called a “weight”. Instead of being programmed, neural networks learn what weights to use through a process of training. After observing enough examples, neural networks can categorize new objects they have never seen before, or at least offer a best guess. Today neural networks have become the dominant methodology for solving classification tasks such as handwriting recognition, speech to text, and object recognition.
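To make the idea of "learning weights instead of programming them" concrete, here is a minimal sketch of a single artificial neuron (a perceptron) trained on a handful of labeled examples. The feature names, the toy data, and the function names `train_perceptron` and `predict` are assumptions made up for illustration; real networks use many neurons and far more data.

```python
def predict(weights, bias, features):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Learn one weight per input, plus a bias, from (features, label) pairs."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # error is +1, 0, or -1; weights only move when the guess was wrong
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical toy data: (has_fur, barks, has_wheels) -> 1 for "dog", 0 otherwise
EXAMPLES = [
    ((1, 1, 0), 1),  # a dog
    ((1, 1, 0), 1),  # another dog
    ((0, 0, 1), 0),  # a car
    ((1, 0, 0), 0),  # furry but silent, e.g. a cat
]

weights, bias = train_perceptron(EXAMPLES)
```

Nothing in the code says what a dog is. The category ends up stored entirely in `weights` and `bias`, and calling `predict(weights, bias, (1, 0, 1))` gives the neuron's best guess for a combination of features it never saw during training.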

Massive parallelism

Neural networks are based on simplified mathematical models of how the brain’s neurons operate. However, today’s hardware is very inefficient at simulating neural network models. This inefficiency can be traced to fundamental differences between how the brain operates and how digital computers operate. While computers store information as a string of 0s and 1s, the synaptic “weights” the brain uses to store information can fall anywhere in a range of values, i.e. the brain is analog rather than digital. More importantly, in a computer the number of signals that can be processed at the same time is limited by the number of CPU cores: this may be between 8 and 12 on a typical desktop or 1,000 to 10,000 on a supercomputer. While 10,000 sounds like a lot, this is tiny compared to the brain, which simultaneously processes up to a trillion (1,000,000,000,000) signals in a massively parallel fashion.

Low power consumption

The two main differences between brains and today’s computers (parallelism and analog storage) contribute to another difference, which is the brain’s energy efficiency. Natural selection made the brain remarkably energy efficient, since hunting for food is difficult. The human brain consumes only 20 watts of power, while a supercomputing complex capable of simulating a tiny fraction of the brain can consume millions of watts. The main reason for this is that computers operate at much higher frequencies than the brain, and power consumption typically grows with the cube of frequency. Additionally, as a general rule digital circuitry consumes more power than analog; for this reason, some parts of today’s cellphones are being built with analog circuits to improve battery life. A final reason for the high power consumption of today’s chips is that they require all signals to be perfectly synchronized by a central clock, requiring a timing distribution system that complicates circuit design and increases power consumption by up to 30%. Copying the brain’s energy-efficient features (low frequencies, massive parallelism, analog signals, and asynchronicity) makes a lot of economic sense and is currently one of the main driving forces behind the development of neuromorphic chips.
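The cubic relationship between frequency and power can be sketched with the standard first-order approximation for dynamic power in CMOS circuits, P ≈ C·V²·f. The cube appears under the added assumption that supply voltage has to rise roughly in proportion to clock frequency, which is an idealization rather than a law. The function name and its parameters below are hypothetical, chosen only for this illustration.

```python
def relative_dynamic_power(freq_ratio, voltage_scales_with_frequency=True):
    """Power relative to a baseline chip, using the approximation P ~ C * V^2 * f.

    freq_ratio is new_frequency / baseline_frequency. Capacitance C is assumed
    unchanged. If voltage is assumed to scale with frequency, the result is
    freq_ratio cubed; if voltage is held fixed, power is merely linear in f.
    """
    voltage_ratio = freq_ratio if voltage_scales_with_frequency else 1.0
    return voltage_ratio ** 2 * freq_ratio


# Running at half the clock speed, with voltage lowered to match:
half_speed = relative_dynamic_power(0.5)   # 0.125, one eighth of the power

# Doubling the clock speed instead:
double_speed = relative_dynamic_power(2.0)  # 8.0, eight times the power
```

Under these assumptions, eight slow units running at half speed use about as much power as one fast unit while doing four times the total work, which is the basic economic argument for many slow, parallel components.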

Fault tolerance

Another difference between neuromorphic chips and conventional computer hardware is the fact that, like the brain, they are fault-tolerant: if a few components fail, the chip continues functioning normally. Some neuromorphic chip designs can sustain defect rates as high as 25%. This is very different from today’s computer hardware, where the failure of a single component usually renders the entire chip unusable. The need for precise fabrication has driven up the cost of chip production exponentially as component sizes have become smaller. Neuromorphic chips require lower fabrication tolerances and thus are cheaper to make.

The Crossnet approach

Many different design architectures are being pursued and developed, with varying degrees of brain-like architecture. Some chips, like Google’s Tensor Processing Unit, which powered DeepMind’s much-lauded victory in Go, are proprietary. Plenty of designs for neuromorphic hardware can be found in the academic literature, though. Many designs use a pattern called a crossbar latch, which is a grid of nanowires connected by ‘latching switches’. At Stony Brook University, Professor Konstantin K. Likharev has designed a neuromorphic network called the “Crossnet”.

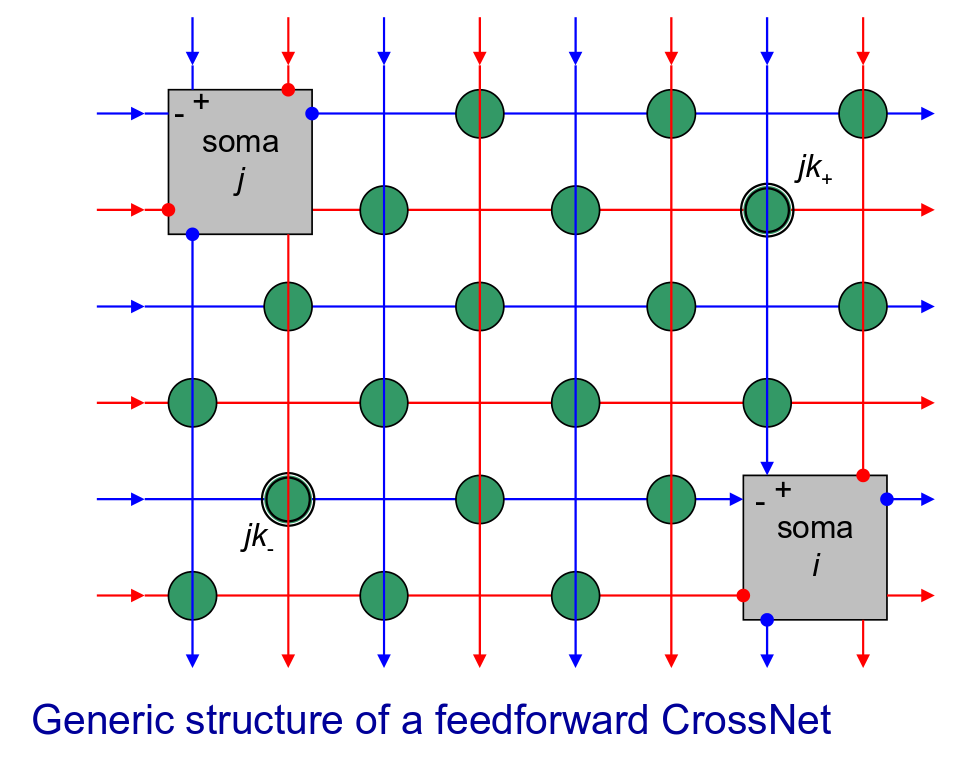

[The figure above depicts a layout showing two ‘somas’, or circuits that simulate the basic functions of a neuron. The green circles play the role of synapses. From a presentation by K.K. Likharev, used with permission.]

One possible layout is shown above. Electronic devices called ‘somas’ play the role of the neuron’s cell body, which is to add up the inputs and fire an output. In neuromorphic hardware, somas may mimic neurons with several different levels of sophistication, depending on what is required for the task at hand. For instance, somas may generate spikes (sequences of pulses) just like neurons in the brain. There is growing evidence that sequences of spikes in the brain carry more information than the average firing rate alone, which previously had been considered the most important quantity. Spikes are carried through the two types of neural wires, axons and dendrites, which are represented by the red and blue lines in the figure above. The green circles are connections between these wires that play the role of synapses. Each of these ‘latching switches’ must be able to hold a ‘weight’, which is encoded in either a variable capacitance or a variable resistance. In principle, memristors would be an ideal component here, if one could be developed that could be mass produced. Crucially, all of the crossnet architecture can be implemented in traditional silicon-based (“CMOS”-like) technology. Each crossnet (as shown in the figure) is designed so that it can be stacked, with additional wires connecting somas on different layers. In this way, neuromorphic crossnet technology can achieve component densities that rival the human brain.
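The "add up the inputs and fire an output" behavior of a soma sitting on a grid of weighted crosspoints can be sketched in a few lines. This is a deliberately simplified threshold model and not Likharev's actual circuit: the function name `crossbar_step`, the weight values, and the firing threshold are all assumptions made for illustration.

```python
def crossbar_step(input_spikes, weights, threshold=1.0):
    """One time step of a toy crossbar.

    input_spikes[i] is 1 if input wire i carries a spike, else 0.
    weights[i][j] is the weight stored at the crosspoint ('synapse')
    joining input wire i to soma j.
    Each soma sums its weighted inputs and fires if the sum reaches threshold.
    """
    n_somas = len(weights[0])
    outputs = []
    for j in range(n_somas):
        total = sum(spike * weights[i][j]
                    for i, spike in enumerate(input_spikes))
        outputs.append(1 if total >= threshold else 0)
    return outputs


# Hypothetical 3-input, 2-soma crossbar, matching the two somas in the figure
WEIGHTS = [
    [0.6, 0.1],  # crosspoints on input wire 0
    [0.9, 0.9],  # crosspoints on input wire 1
    [0.5, 0.2],  # crosspoints on input wire 2
]

fired = crossbar_step([1, 0, 1], WEIGHTS)  # soma 0 fires, soma 1 stays silent
```

In software, the inner sum runs one crosspoint at a time. In a hardware crossbar, every crosspoint contributes to its soma's input simultaneously, which is where the massive parallelism comes from.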

Likharev’s design is still theoretical, but there are already several neuromorphic chips in production, such as IBM’s TrueNorth chip, which features spiking neurons, and Qualcomm’s “Zeroth” project. NVIDIA is currently making major investments in deep learning hardware, and the next generation of NVIDIA devices dedicated to deep learning will likely look closer to neuromorphic chips than traditional GPUs. Another important player is the startup Nervana Systems, which was recently acquired by Intel for $400 million. Many governments are investing large amounts of money into academic research on neuromorphic chips as well. Prominent examples include the EU’s BrainScaleS project, the UK’s SpiNNaker project, and DARPA’s SyNAPSE program.

Near-future applications

Neuromorphic hardware will make deep learning orders of magnitude faster and more cost-effective, and thus will be the key driver behind enhanced AI in the areas of big data mining, character recognition, surveillance, robotic control and driverless car technology. Because neuromorphic chips have low power consumption, it is conceivable that some day in the near future all cell phones will contain a neuromorphic chip that performs tasks such as speech to text or translating road signs from foreign languages. Currently, apps that perform deep learning tasks require connecting to the cloud to perform the necessary computations. The low power consumption of neuromorphic chips also makes them attractive for military field robots, which are currently limited by high power consumption that quickly drains their batteries.

Cognitive architectures

According to Prof. Likharev, neuromorphic chips are the only current technology which can conceivably “mimic the mammalian cortex with practical power consumption”. Prof. Likharev estimates that his own ‘crossnet’ technology can in principle implement the same number of neurons and connections as the brain on approximately 10 × 10 cm of silicon. Conceivably, production of a 10 × 10 cm chip will be practical in only a few years, as most of the requisite technologies are already in place. However, implementing a human-level AI or artificial general intelligence (AGI) with a neuromorphic chip will require much more than just creating the requisite number of neurons and connections. The human brain consists of thousands of interacting components or subnetworks. A collection of components and their pattern of connection is known as a ‘cognitive architecture’. The cognitive architecture of the brain is largely unknown, but there are serious efforts underway to map it, most notably Obama’s BRAIN Initiative and the EU’s Human Brain Project, which has the ambitious (some say overambitious) goal of simulating the entire human brain in the next decade. Neuromorphic chips are perfectly suited to testing out different hypothetical cognitive architectures and simulating how cognitive architectures may change due to aging or disease. In principle, AGI could also be developed using an entirely different cognitive architecture that bears little resemblance to the human brain.

Conclusion

Considering how much money is being invested in neuromorphic chips, one can already see a path which leads to AGI. The major unknown is how long it will take for a suitable cognitive architecture to be developed. The fundamental physics of neuromorphic hardware is solid: such chips can mimic the brain in component density and power consumption, and with thousands of times the speed. Even if some governments seek to ban the development of AGI, it will be realized by someone, somewhere. What happens next is a matter of intense speculation. If an AGI is capable of recursive self-improvement and has access to the internet, the results could be disastrous for humanity. As discussed by the philosopher Nick Bostrom and others, developing containment and ‘constrainment’ methods for AI is not as easy as merely ‘installing a kill switch’ or putting the hardware in a Faraday cage. Therefore, we had best start thinking hard about such issues now, before it is too late.

About the Author:

Dan Elton is a physics PhD candidate at the Institute for Advanced Computational Science at Stony Brook University. He is currently looking for employment in the areas of machine learning and data science. In his spare time he enjoys writing about the effects of new technologies on society. He blogs at www.moreisdifferent.com and tweets at @moreisdifferent.

Further reading:

Monroe, Don. “Neuromorphic Computing Gets Ready for the (Really) Big Time” Communications of the ACM, Vol. 57 No. 6, Pages 13-15