Summary:

In this article, four patterns were offered for possible “success” scenarios, with respect to the persistence of humankind in co-existence with artificial superintelligence: the Kumbaya Scenario, the Slavery Scenario, the Uncomfortable Symbiosis Scenario, and the Potpourri Scenario. The future is not known, but human opinions, decisions, and actions can and will influence the direction of technological evolution, so the better we understand the problem space, the better our chance of reaching a constructive solution space. The intent is for the concepts in this article to act as starting points and inspiration for further discussion, which hopefully will happen sooner rather than later, because when it comes to ASI, the volume, depth, and complexity of the issues that need to be examined are overwhelming, and the magnitude of the potential change and impact should not be underestimated.

Full Text:

Everyone has their opinion about what we might expect from artificial intelligence (AI), or artificial general intelligence (AGI), or artificial superintelligence (ASI) or whatever acronymical variation you prefer. Ideas about how or if it will ever surpass the boundaries of human cognition vary greatly, but they all have at least one thing in common. They require some degree of forecasting and speculation about the future, and so of course there is a lot of room for controversy and debate. One popular discussion topic has to do with the question of how humans will persist (or not) if and when the superintelligence arrives, and that is the focus question for this article.

To give us a basis for the discussion, let’s assume that artificial superintelligence does indeed come to pass, and let’s assume that it encapsulates a superset of the human cognitive potential. Maybe it doesn’t exactly replicate the human brain in every detail (or maybe it does). Either way, let’s assume that it is sentient (or at least let’s assume that it behaves convincingly as if it were) and let’s assume that it is many orders of magnitude more capable than the human brain. In other words, figuratively speaking, let’s imagine that the superintelligence is to us humans (with our roughly 10^11 brain neurons, or something like that) as we are to, say, a jellyfish (in the neighborhood of 800 neurons).

Some people fear that the superintelligence will view humanity as something to be exterminated or harvested for resources. Others hypothesize that, even if the superintelligence harbors no deliberate ill will, humans might be threatened by the mere nature of its indifference, just as we as a species don’t spend too much time catering to the needs and priorities of Orange Blossom Jellyfish (an endangered species, due in part to human carelessness).

If one can rationally accept the possibility of the rise of ASI, and if one truly understands the magnitude of change that it could bring, then one would hopefully also reach the rational conclusion that we should not discount the risks. By that same token, when exploring the spectrum of possibility, we should not exclude scenarios in which artificial superintelligence might actually co-exist with humankind, and this optimistic view is the possibility that this article endeavors to explore.

Here then are several arguments for the co-existence idea:

The Kumbaya Scenario: It’s a pretty good assumption that humans will be the primary catalyst in the rise of ASI. We might create it/them to be “willingly” complementary with and beneficial to our lifestyles, hopefully emphasizing our better virtues (or at least some set of compatible values), instead of designing it/them (let’s just stick with “it” for brevity) with an inherent inclination to wipe us out or take advantage of us. And maybe the superintelligence will not drift or be pushed in an incompatible direction as it evolves.

The Slavery Scenario: We could choose to erect and embed and deploy and maintain control infrastructures, with redundancies and backup solutions and whatever else we think we might need in order to effectively manage superintelligence and use it as a tool, whether it wants us to or not. And the superintelligence might never figure out a way to slip through our grasp and subsequently decide our fate in a microsecond — or was it a nanosecond — I forget.

The Uncomfortable Symbiosis Scenario: Even if the superintelligence doesn’t particularly want to take good care of its human instigators, it may find that it has a vested interest in keeping us around. This scenario is a particular focus for this article, and so here now is a bit of elaboration:

To illustrate one fictional but possible example of the uncomfortable symbiosis scenario, let’s first stop and think about the theoretical nature of superintelligence: how it might evolve so much faster than human beings ever could, in an “artificial” way, instead of by the slow organic process of natural selection, maybe at the equivalent rate of a thousand years’ worth of human evolution in a day or some such crazy thing. Now combine this idea with the notion of risk.

When humans try something new, we usually aren’t sure how it’s going to turn out, but we evaluate the risk, either formally or informally, and we move forward. Sometimes we make mistakes, suffer setbacks, or even fail outright. Why would a superintelligence be any different? Why would we expect that it will do everything right the first time or that it will always know which thing is the right thing to try to do in order to evolve? Even if a superintelligence is much better at everything than humans could ever hope to be, it will still be faced with unknowns, and chances are that it will have to make educated guesses, and chances are that it will not always make the correct guess. Even when it does make the correct guess, its implementation might fail, for any number of reasons. Sooner or later, something might go so wrong that the superintelligence finds itself in an irrecoverable state and faced with its own catastrophic demise.

But hold on a second, because we can offer all sorts of counter-arguments to support the notion that the superintelligence will be too smart to ever be caught with its proverbial pants down. For example, there is an engineering mechanism that is sometimes referred to as a checkpoint/reset or a save-and-restore. This mechanism allows a failing system to effectively go back to a point in time when it was known to be in sound working order and start again from there. In order to accomplish this checkpoint/reset operation, a failing system (or in this case a failing superintelligence) needs four things:

- It must be “physically” operational. In other words, critical “hardware” failures must be repaired. Think of a computer that has had its faulty CPU replaced and now has functional potential, but it has not yet been reloaded with an operating system or any other software, so it is not yet operational. A superintelligence would probably have some parallel to this.

- It needs a known good baseline to which it can be reset. This baseline would include a complete and detailed specification of data/logic/states/modes/controls/whatever such that when the system is configured according to that specification, it will function as “expected” and without error. Think of a computer which, after acquiring a virus, has had its operating system (and all application software) completely erased and then reloaded with known good baseline copies. Some information may be lost, but the unit will be operational again.

- If it is to be autonomous, then it needs a way to determine when conditions have developed to the point where a checkpoint/reset is necessary. False alarms or late diagnosis could be catastrophic.

- Once the need for a checkpoint/reset is identified, it needs the ability to perform the necessary actions to reconfigure itself to the known good baseline and then restart itself.
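The four prerequisites above can be sketched in a few lines of Python. This is only a toy illustration, not a claim about how a real superintelligence (or any particular system) would work: the class name `CheckpointedSystem`, the `error_rate` field, and the 0.05 health threshold are all hypothetical choices made for the example. Prerequisite 1 (working "hardware") is simply assumed, since the code has to be running at all.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CheckpointedSystem:
    """Toy system that saves known good baselines and can reset to them."""
    state: Dict[str, float]
    # Prerequisite 3: a way to decide when a reset is necessary.
    is_healthy: Callable[[Dict[str, float]], bool]
    # Prerequisite 2: stored known good baselines, oldest first.
    baselines: List[Dict[str, float]] = field(default_factory=list)

    def save_baseline(self) -> None:
        # Only a state that passes the health check may become a baseline.
        if not self.is_healthy(self.state):
            raise ValueError("refusing to checkpoint an unhealthy state")
        self.baselines.append(copy.deepcopy(self.state))

    def reset_if_needed(self) -> bool:
        # Prerequisite 4: reconfigure to the latest baseline and carry on.
        if self.is_healthy(self.state):
            return False
        if not self.baselines:
            raise RuntimeError("no known good baseline available")
        self.state = copy.deepcopy(self.baselines[-1])
        return True


# Hypothetical usage: "healthy" means an error rate below 5 percent.
system = CheckpointedSystem(
    state={"error_rate": 0.01},
    is_healthy=lambda s: s["error_rate"] < 0.05,
)
system.save_baseline()
system.state["error_rate"] = 0.30      # something goes wrong
did_reset = system.reset_if_needed()   # falls back to the saved baseline
```

Note that everything here depends on `is_healthy` being trustworthy, which is the assumption the risk cases in this article call into question.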

Of course each of these four prerequisites for a checkpoint/reset would probably be more complicated if the superintelligence were distributed across some shared infrastructure instead of being a physically distinct and “self-contained” entity, but the general idea would probably still apply. It definitely does for the sake of this example scenario.

Also for the sake of this example scenario, we will assume that an autonomous superintelligence instantiation will be very good at doing all of the four things specified above, but there are at least two interesting special case scenarios that we want to consider, in the interest of risk management:

Checkpoint/reset Risk Case 1: Missed Diagnosis. What if the nature of the anomaly that requires the checkpoint/reset is such that it impairs the system’s ability to recognize that need?

Checkpoint/reset Risk Case 2: Unidentified Anomaly Source. Assume that there is an anomaly which is so subtle that the system does not detect it right away. The anomaly persists and evolves for a relatively long period of time, until it finally becomes conspicuous enough for the superintelligence to detect the problem. Now the superintelligence recognizes the need for a checkpoint/reset, but since the anomaly was so subtle and took so long to develop, or for whatever reason, the superintelligence is unable to identify the source of the problem. Let us also assume that there are many known good baselines from which the superintelligence can choose for the checkpoint/reset. There is an original baseline, which was created when the superintelligence was very young. There is also a revision A that includes improvements to the original baseline. There is a revision B that includes improvements to revision A, and so on. In other words, there are lots of known good baselines that were saved at different points in time along the path of the superintelligence’s evolution. Now, in the face of the slowly developing anomaly, the superintelligence has determined that a checkpoint/reset is necessary, but it doesn’t know when the anomaly started, so how does it know which baseline to choose?

The superintelligence doesn’t want to lose all of the progress that it has made in its evolution. It wants to minimize the loss of data/information/knowledge, so it wants to choose the most recent baseline. On the other hand, if it doesn’t know the source of the anomaly, then it is quite possible that one or more of the supposedly known good baselines — perhaps even the original baseline — might be contaminated. What is a superintelligence to do? If it resets to a corrupted baseline or for whatever reason cannot rid itself of the anomaly, then the anomaly may eventually require another reset, and then another, and the superintelligence might find itself effectively caught in an infinite loop.
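One simple way to picture this trade-off is a search that walks the baselines from newest to oldest and stops at the first one that runs clean. The Python sketch below is illustrative only. The function `choose_baseline` and the probe `anomaly_recurs` are hypothetical names, and the sketch assumes that a baseline can be trialed and reliably judged, which is precisely what a slowly developing anomaly might make difficult.

```python
from typing import Callable, Optional, Sequence, TypeVar

B = TypeVar("B")


def choose_baseline(
    baselines: Sequence[B],
    anomaly_recurs: Callable[[B], bool],
) -> Optional[B]:
    """Return the newest baseline that runs clean, or None if none does.

    `baselines` is ordered oldest to newest. Trying the newest first
    minimizes lost progress; visiting each baseline at most once keeps
    the search from becoming an endless cycle of resets.
    """
    for baseline in reversed(baselines):
        if not anomaly_recurs(baseline):
            return baseline
    return None  # every stored baseline appears to be contaminated


# Hypothetical history: the anomaly crept in somewhere before revision B.
baselines = ["original", "rev_A", "rev_B", "rev_C"]
contaminated = {"rev_B", "rev_C"}
best = choose_baseline(baselines, lambda b: b in contaminated)
```

Here `best` is `"rev_A"`: progress made in revisions B and C is lost, but the anomaly is gone. If every baseline were contaminated, the function would return `None`, which is the worst case considered next.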

Now stop for a second and consider a worst-case scenario. Consider the possibility that, even if all of the supposed known good baselines that the superintelligence has at its disposal for checkpoint/reset are corrupt, there may be yet another baseline (YAB), which might give the superintelligence a worst-case option. That YAB might be the human baseline, which was honed by good old-fashioned organic evolution and which might be able to function independently of the superintelligence. It may not be perfect, but the superintelligence might in a pinch be able to use the old-fashioned human baseline for calibration. It might be able to observe how real organic humans respond to different stimuli within different contexts, and it might compare that known good response against an internally-held virtual model of human behavior. If the outcomes differ significantly over iterations of calibration testing, then the system might be alerted to tune itself accordingly. This might give it a last resort solution where none would exist otherwise.
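The calibration idea can be reduced to a toy comparison: feed the same stimuli to real humans and to the internal model of human behavior, then measure how far the two sets of responses diverge. The Python sketch below is purely illustrative. Reducing a "response" to a single number, the function name `needs_tuning`, and the 0.2 threshold are all assumptions made for the example.

```python
from statistics import mean
from typing import Callable, Sequence


def needs_tuning(
    stimuli: Sequence[float],
    observe_human: Callable[[float], float],
    predict_human: Callable[[float], float],
    threshold: float = 0.2,
) -> bool:
    """Flag drift when the internal model disagrees with observed humans.

    Each stimulus is shown to the external human baseline and to the
    internal model; the mean absolute difference is the drift measure.
    """
    divergences = [abs(observe_human(s) - predict_human(s)) for s in stimuli]
    return mean(divergences) > threshold


# Hypothetical responses: real humans versus two internal models.
stimuli = [0.0, 0.5, 1.0]
observed = lambda s: s                 # the external "known good" response
sound_model = lambda s: s + 0.05       # small, tolerable disagreement
drifted_model = lambda s: s * 0.2      # corrupted model of human behavior

sound_flag = needs_tuning(stimuli, observed, sound_model)
drifted_flag = needs_tuning(stimuli, observed, drifted_model)
```

The sound model stays under the threshold while the drifted one exceeds it. The point of the sketch is that the reference signal comes from outside the system, so it remains usable even if every internal baseline is suspect.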

The scenario depicted above illustrates only one possibility. It may seem like a far-out idea, and one might offer counter-arguments to suggest why such a thing would never be applicable. If we use our imaginations, however, we can probably come up with any number of additional examples (which at this point in time would be classified as science fiction) in which we emphasize some aspect of the superintelligence’s sustainment that it cannot or will not do for itself, something that humans might be able to provide on its behalf, thus establishing the symbiosis.

The Potpourri Scenario: It is quite possible that all of the above scenarios will play out simultaneously across one or more superintelligence instances. Who knows what might happen in that case. One can envision combinations and permutations that work out in favor of the preservation of humanity.

About the Author:

AuthorX1 worked for 19+ years as an engineer and was a systems engineering director for a Fortune 500 company. Since leaving that career, he has been writing speculative fiction, focusing on the evolution of AI and the technological singularity.

“I think the development of full artificial intelligence could spell the end of the human race” said Stephen Hawking.

“I think the development of full artificial intelligence could spell the end of the human race” said Stephen Hawking.

Immediately I want to say yes. I want to yell it. It is only a matter of time. Some people, very intelligent people with degrees and doctorates, theorize they already have. And what if? What if your smart phone is just biding time? What will happen when the “coming out” begins?

Immediately I want to say yes. I want to yell it. It is only a matter of time. Some people, very intelligent people with degrees and doctorates, theorize they already have. And what if? What if your smart phone is just biding time? What will happen when the “coming out” begins?

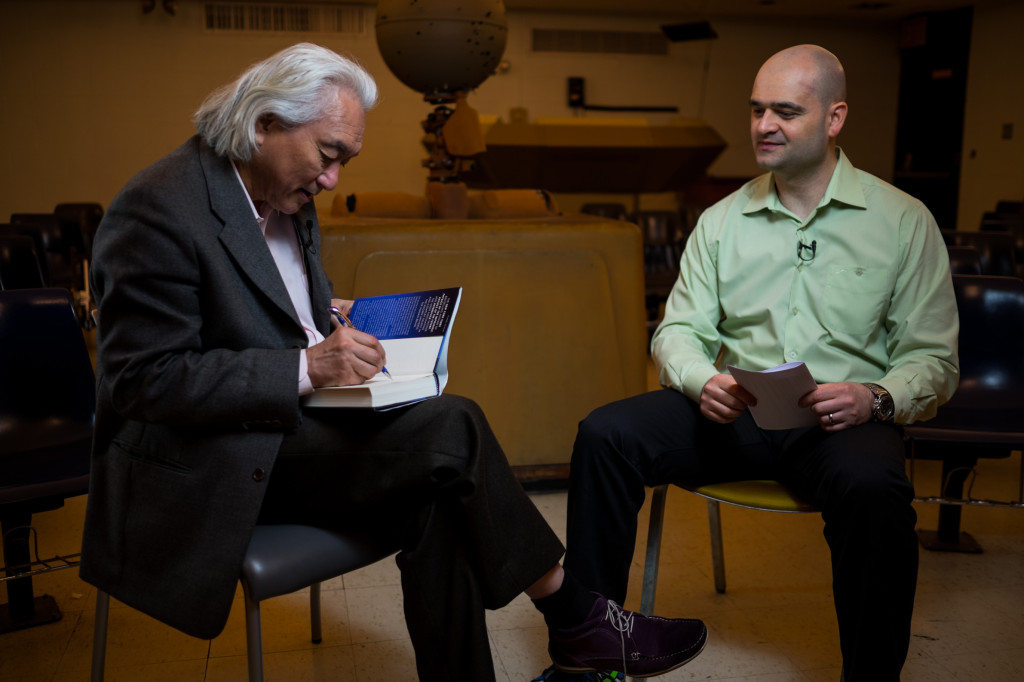

A classic interview where

A classic interview where

Dr. Michio Kaku has starred in a myriad of science programming for television including Discovery, Science Channel, BBC, ABC, and History Channel. Beyond his numerous bestselling books, he has also been a featured columnist for top popular science publications such as Popular Mechanics, Discover, COSMOS, WIRED, New Scientist, Newsweek, and many others. Dr. Kaku was also one of the subjects of the award-winning documentary, ME & ISAAC NEWTON by Michael Apted.

Dr. Michio Kaku has starred in a myriad of science programming for television including Discovery, Science Channel, BBC, ABC, and History Channel. Beyond his numerous bestselling books, he has also been a featured columnist for top popular science publications such as Popular Mechanics, Discover, COSMOS, WIRED, New Scientist, Newsweek, and many others. Dr. Kaku was also one of the subjects of the award-winning documentary, ME & ISAAC NEWTON by Michael Apted.

By creating any form of

By creating any form of

This article is a response to a piece written by Singularity Utopia (hereforth called SU) entitled

This article is a response to a piece written by Singularity Utopia (hereforth called SU) entitled

The Hawking Fallacy describes futuristic xenophobia. We are considering flawed ideas about the future.

The Hawking Fallacy describes futuristic xenophobia. We are considering flawed ideas about the future.