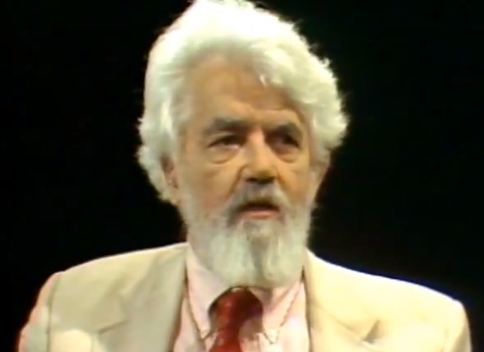

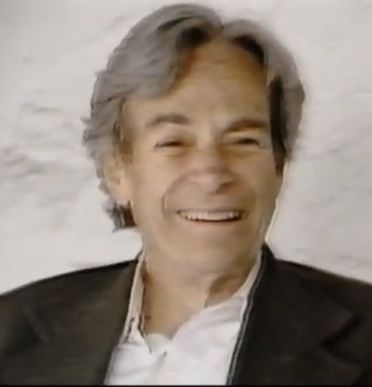

Peter Voss is an entrepreneur, inventor, engineer, scientist, and AI researcher. He is an interesting and unique individual, not only because of his diverse background and impressive accomplishments but also because of his interest in moral philosophy and artificial intelligence. I have been planning to interview Voss for a while and, given how quickly our discussion went by, I will do my best to bring him back for another interview.

During our 1-hour conversation with Peter, we cover a variety of topics such as his excitement in pursuing a dream that others have failed to accomplish for the past 50 years; whether we are rational or irrational animals; utility curves and the motivation of AGI; the importance of philosophy and ethics; Bertrand Russell and Ayn Rand; his companies A2I2 and Smart Action; his [revised] optimism and the timeline for building AGI; the Turing Test and the importance of asking questions; Our Final Invention and friendly AI; intelligence and morality…

As always, you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Peter Voss?

Peter started his career as an entrepreneur, inventor, engineer, and scientist at age 16. After a few years in electronics engineering, at age 25 he started a company to provide turnkey business solutions based on self-developed software, running on micro-computer networks. Seven years later the company employed several hundred people and was successfully listed on the Johannesburg Stock Exchange.

After selling his interest in the company in 1993, he worked in a broad range of disciplines — cognitive science, philosophy, theory of knowledge, psychology, intelligence and learning theory, and computer science — which served as the foundation for achieving breakthroughs in artificial general intelligence. In 2001 he started Adaptive AI Inc., with the purpose of developing systems with a high degree of general intelligence and commercializing services based on these inventions. Smart Action Company, which utilizes an AGI engine to power its call automation service, was founded in 2008.

Peter often writes and presents on various philosophical topics including rational ethics, free will, and artificial minds; and is deeply involved with futurism and radical life extension.

Related articles

- Steve Omohundro on Singularity 1 on 1: It’s Time To Envision Who We Are And Where We Want To Go

- The World is Transformed by Asking Questions [draft]

Many analysts think AI could destroy the Earth or humanity. It is feared AI could become psychopathic. People assume AI or robots could

Imposing limitations upon intelligence is extremely backward. And so is establishing organisations advocating limited functionality for AI. This is a typical problem with organisations backed by

Mankind’s future might be in peril: What happens if an AGI is developed with the shark mentality of a Stock Trading

Therefore the physical appearance had better be that of an innocent young woman. It needs a tip-tilted nose and pyramid hair. A kind lip rouge smile floating above a simple flower dress. When released into the chaotic environment of a nameless city, the humanoid money machine has to find its way in society and survive on its own. For starters it will try out several occupations like big data analyst, DNA bio-engineer, cryptographic specialist – all simple things for an AGI in its teenage time.

Back at the drawing board, the feral MPS runs some behavioral economic simulations, profit models and return-on-investment curves. Looking for the golden graph that resembles an exponential. And sure enough, there it is. The final printout recommends designing a soda. Compose a soft drink more addictive than sugar, TV or the aforementioned recreationals. In no time the MPS develops a beverage with strange new ingredients that will pop heads legally. Forget about feel-good hormones, serotonin, dopamine or even endorphins, the body’s homemade morphine. This brain juice tickles the frontal lobe like nothing before.

All over the world, a fifth column of shekel-seeking androids emerges silently, taking over relevant social positions. Penetrating into politics, where the real pot of gold awaits – with only a handful of insiders aware of what’s going on. These folks are the Masters of the Universe, in control of mankind’s most sophisticated instrument, the super-intelligent robot slave. The next politician you vote for might be made of nuts and bolts and, more importantly, programmed to drain the funding a country needs to survive and thrive. Disguised as a humble servant to the citizens, it will enact laws from which only the chosen few will benefit. Don’t blame the president for a change, for it is just a puppet.

For 20 years
