Dr. Anthony Atala of the Wake Forest Institute for Regenerative Medicine appears in an amazing video demonstrating the promise of regenerative medicine in general and artificially grown organs in particular.

Here is the timeline description of the video as posted by Singularity Hub.

1:46 – Atala alerts the audience to the scope of the problems with organ transplants and the limited supply of organs, starting with the quote from above.

2:28 – An interesting time-lapse video of a salamander regrowing a forelimb.

3:55 – Biomaterials can act as a bridge to encourage tissue growth over damaged areas or gaps. The bridge length, however, is limited to about 1 cm.

5:15 – Using cells that are cultured outside the body, Atala can take an artificial scaffold and create a new organ.

6:20 – Atala uses a bio-reactor to exercise and condition muscle tissue before it is placed in a patient. It’s really amazing to watch the tissue be stimulated outside the body.

6:58 – The same techniques can be used to create engineered blood vessels.

8:30 – The Wake Forest team can take cell samples to create an engineered bladder and place it into a patient in just six to eight weeks!

9:50 – Is a bladder not impressive enough for you? Check out this heart valve that Atala’s team grew and then exercised in a reactor.

10:12 – Ok…This is just incredible. Watch as Atala shows you an artificially grown ear! And then a few seconds later, the bones of a finger!

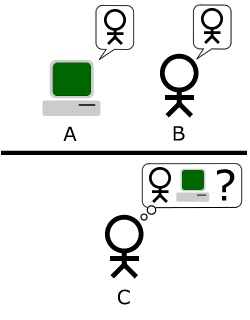

11:03 – Here we get to a demonstration that has generated a lot of interest. Using a common inkjet printer, the Wake Forest Institute can print muscle cells into tissue. The two-chambered heart shown here is still very experimental and not for use in patients.

11:50 – Another possible means of regrowing tissue is to take donor organs and strip them of everything but collagen. This scaffold is then used as a base to grow a new organ from a patient’s own cells. We’ve seen this with stem cells and hearts, and also with a trachea.

13:20 – 90% of patients waiting for organs in the US need a kidney. The Wake Forest team has used wafers of cells to create miniature kidneys that are still in the test phase.

13:58 – Atala discusses strategies in regenerative medicine.

15:37 – Developing these new medical techniques will not be easy.

16:55 – Atala ends with an example of how the (relatively) long history of regenerative medicine has already improved the lives of patients.

“A drone pilot’s nightmare came true when operators lost control of an armed MQ-9 Reaper flying a combat mission over Afghanistan on Sunday. That led a manned U.S. aircraft to shoot down the unresponsive drone before it flew beyond the edge of Afghanistan airspace.”