ReWriting the Human Story: How Our Story Determines Our Future

an alternative thought experiment by Nikola Danaylov

Chapter 11: The AI Story

Computer Science is no more about computers than astronomy is about telescopes. Edsger Dijkstra

When looms weave by themselves, man’s slavery will end. Aristotle

Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended. Vernor Vinge, 1993

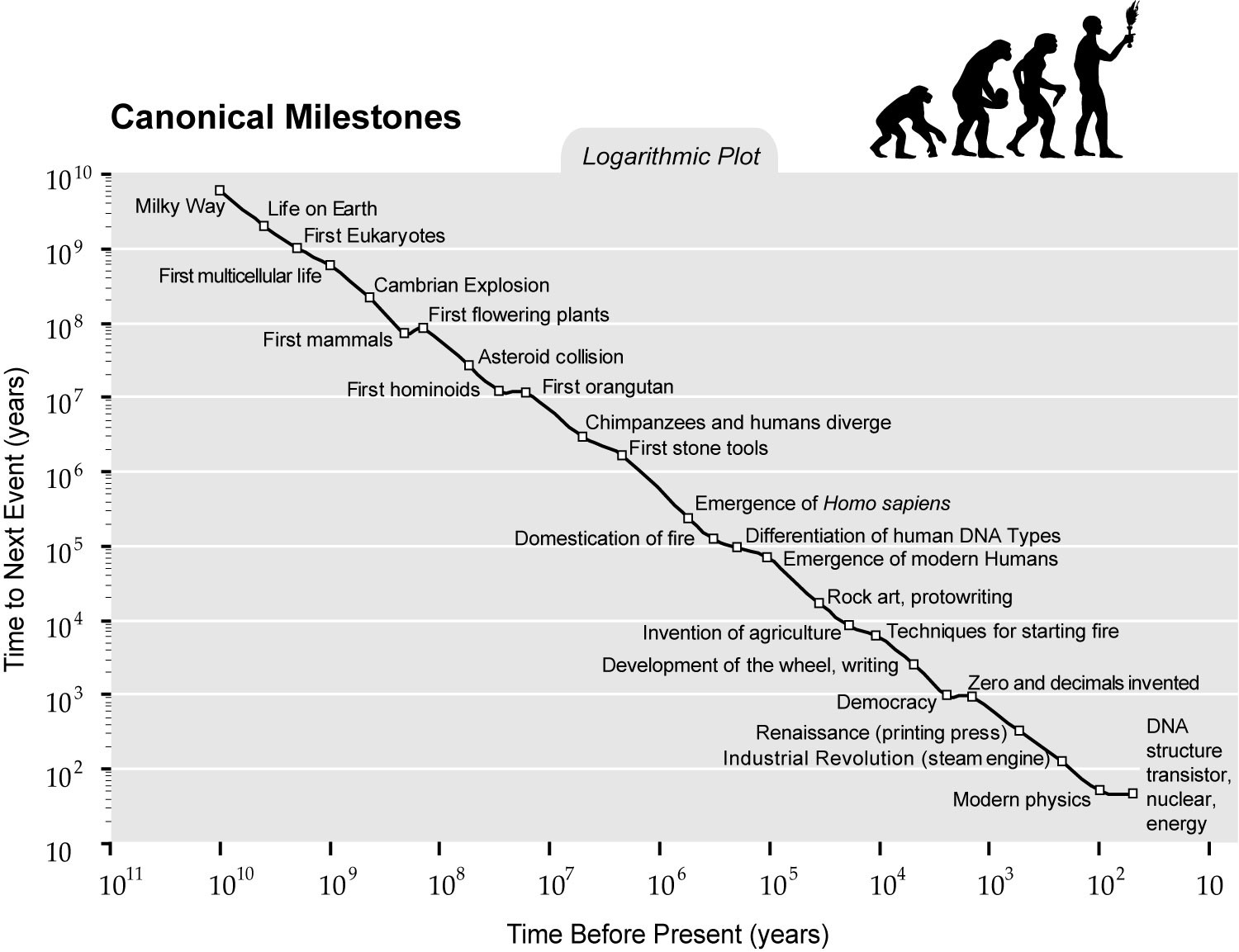

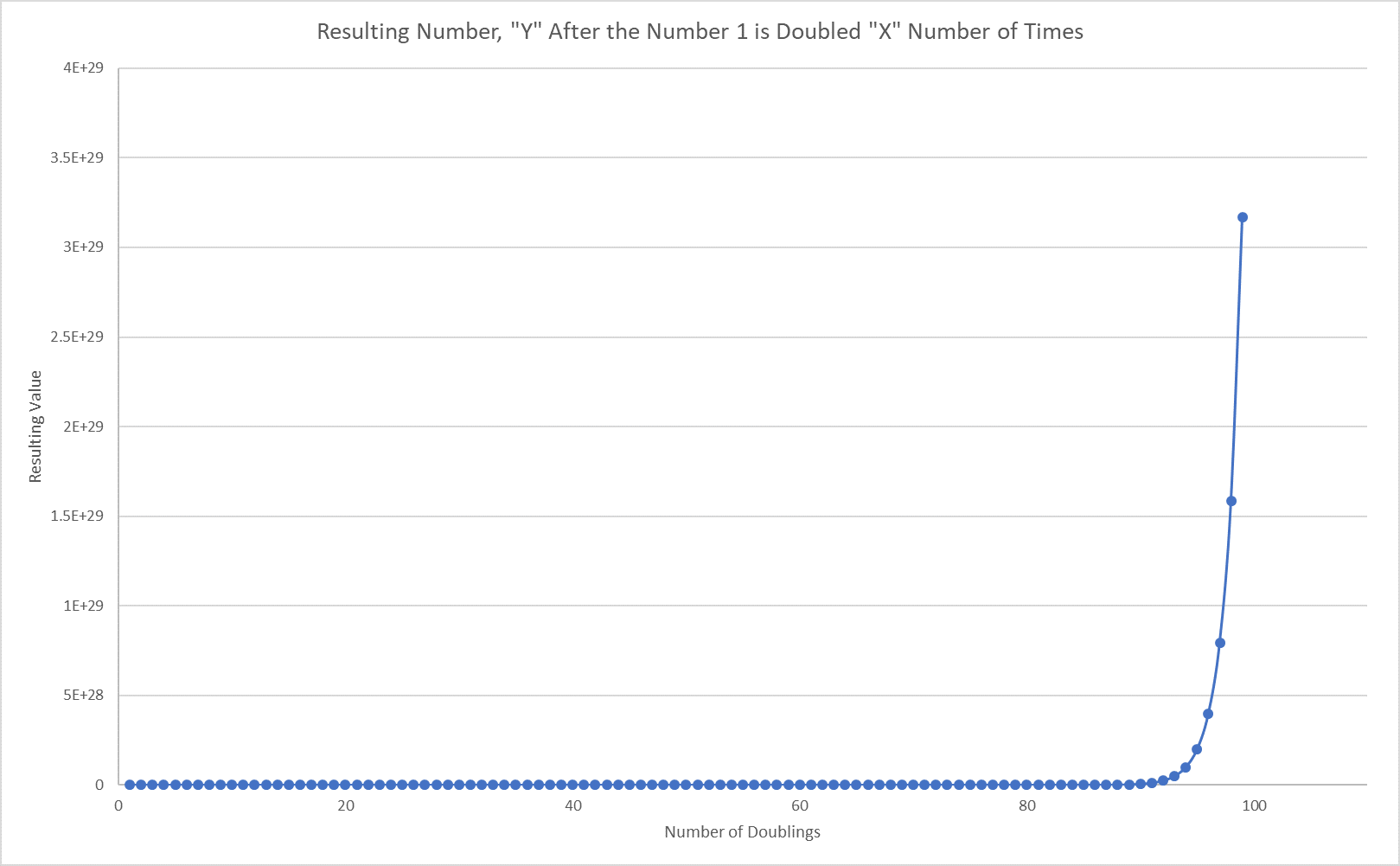

Today we are entirely dependent on machines. So much so that, if we were to turn off the machines invented since the Industrial Revolution, billions of people would die and civilization would collapse. Ours is therefore already a civilization of machines and technology, because they have become indispensable. The question is: What is the outcome of that process? Is it freedom and transcendence or slavery and extinction?

Our present situation is no surprise for it was in the relatively low-tech 19th century when Samuel Butler wrote Darwin among the Machines. There he combined his observations of the rapid technological progress of the Industrial Revolution and Darwin’s theory of evolution. That synthesis led Butler to conclude that intelligent machines are likely to be the next step in evolution:

…it appears to us that we are ourselves creating our own successors; we are daily adding to the beauty and delicacy of their physical organisation; we are daily giving them greater power and supplying by all sorts of ingenious contrivances that self-regulating, self-acting power which will be to them what intellect has been to the human race. In the course of ages we shall find ourselves the inferior race.

Samuel Butler developed his ideas further in Erewhon, which was published in 1872:

There is no security against the ultimate development of mechanical consciousness, in the fact of machines possessing little consciousness now. A mollusk has not much consciousness. Reflect upon the extraordinary advance which machines have made during the last few hundred years, and note how slowly the animal and vegetable kingdoms are advancing. The more highly organized machines are creatures not so much of yesterday, as of the last five minutes, so to speak, in comparison with past time.

Like Samuel Butler, Ted Kaczynski rooted his technophobia in the fear that:

… the human race might easily permit itself to drift into a position of such dependence on the machines that it would have no practical choice but to accept all of the machines’ decisions. As society and the problems that face it become more and more complex and machines become more and more intelligent, people will let machines make more of their decisions for them, simply because machine-made decisions will bring better results than man-made ones. Eventually a stage may be reached at which the decisions necessary to keep the system running will be so complex that human beings will be incapable of making them intelligently. At that stage the machines will be in effective control. People won’t be able to just turn the machines off, because they will be so dependent on them that turning them off would amount to suicide. The Unabomber Manifesto

As noted at the beginning of this chapter, humanity has already reached the machine dependence that Kaczynski was worried about. Contemporary experts may disagree on when artificial intelligence will equal human intelligence but most believe that in time it likely will. And there is no reason why AI will stop there. What happens next depends on both the human story and the AI story.

For example, if AI is created in a corporate lab it will likely be commercialized. If AI is created in a military lab it will likely be militarized. If AI is created in an Open Source community it will likely be cooperative and collaborative. And if it is created in someone’s garage it will likely reflect the story of that particular person or people. So, the context within which AI is created will shape its own origin story and that story will define the way it treats humanity.

Thus a military AI will likely treat humans as allies and enemies. A commercial AI will likely treat humans as customers and products. An Open Source AI might treat humans as parents, coders, friends, or allies. [Given current funding trends the first two types seem the most likely.] So the most crucial thing that humanity will do when creating AI is narrating the AI origin story. Because, as with us, by describing how it came into being, what it is here for, and what its purpose and proper place in the universe are, its story will determine its future. If we get the AI story right we have a chance to coexist peacefully. But if we get it wrong that could mean a Gigawar of planetary proportions, even extinction.

For example, what if, like us, AI ends up with a story of being the pinnacle of evolution, the smartest species in the universe, and, ultimately, God?

This “AI-ism” would be to AIs very much what humanism is to us humans. Would it be surprising if AI used this story to justify enslaving and killing billions of humans and destroying the biosphere, just as we use ours to justify killing 73 billion animals and 1.3 trillion aquatic organisms every year? After all, as Zora Neale Hurston pointed out in Tell My Horse:

Gods always behave like the people who make them.

That is why it is crucial that humanity transcends humanism to embrace post-humanism, post-anthropomorphism, post-exclusivism, and post-dualism. Because one speciesist story should not be replaced by another. Not even with one about the primacy of the superintelligent machines, transhumans, posthumans, aliens, or uplifted animals. To be a true revolution, the AI revolution must change our story structure and abolish its current hierarchy. Otherwise, oppression will persist, suffering will increase, and the only change will be who is the oppressor and who is the oppressed.

In other words, the dangers posed by AI originate in the same place as the dangers posed by humanity: our story. If, like the human story, the AI story ends up as one of uniqueness, exclusivity, progress, supremacy, dominance, alienation, teleology, manifest destiny, and godhood, then we can expect a similarly destructive impact. Therefore, to minimize suffering and improve our own chances of survival during the turbulent 21st century, both humanity and AI must embrace a new type of story. One that is decentralized, non-singular, non-hierarchical, non-speciesist, non-dualistic, and non-exclusive. Because a multiplicity is ethically better than a singularity. And because it is safer too.

I have previously interviewed a few fantastic scientists and philosophers but rare are those strange birds who manage to combine together both deep academic training and the living ethos of those separate disciplines. Prof.

I have previously interviewed a few fantastic scientists and philosophers but rare are those strange birds who manage to combine together both deep academic training and the living ethos of those separate disciplines. Prof.  A few weeks ago I got interviewed by Ricardo Lopes for

A few weeks ago I got interviewed by Ricardo Lopes for

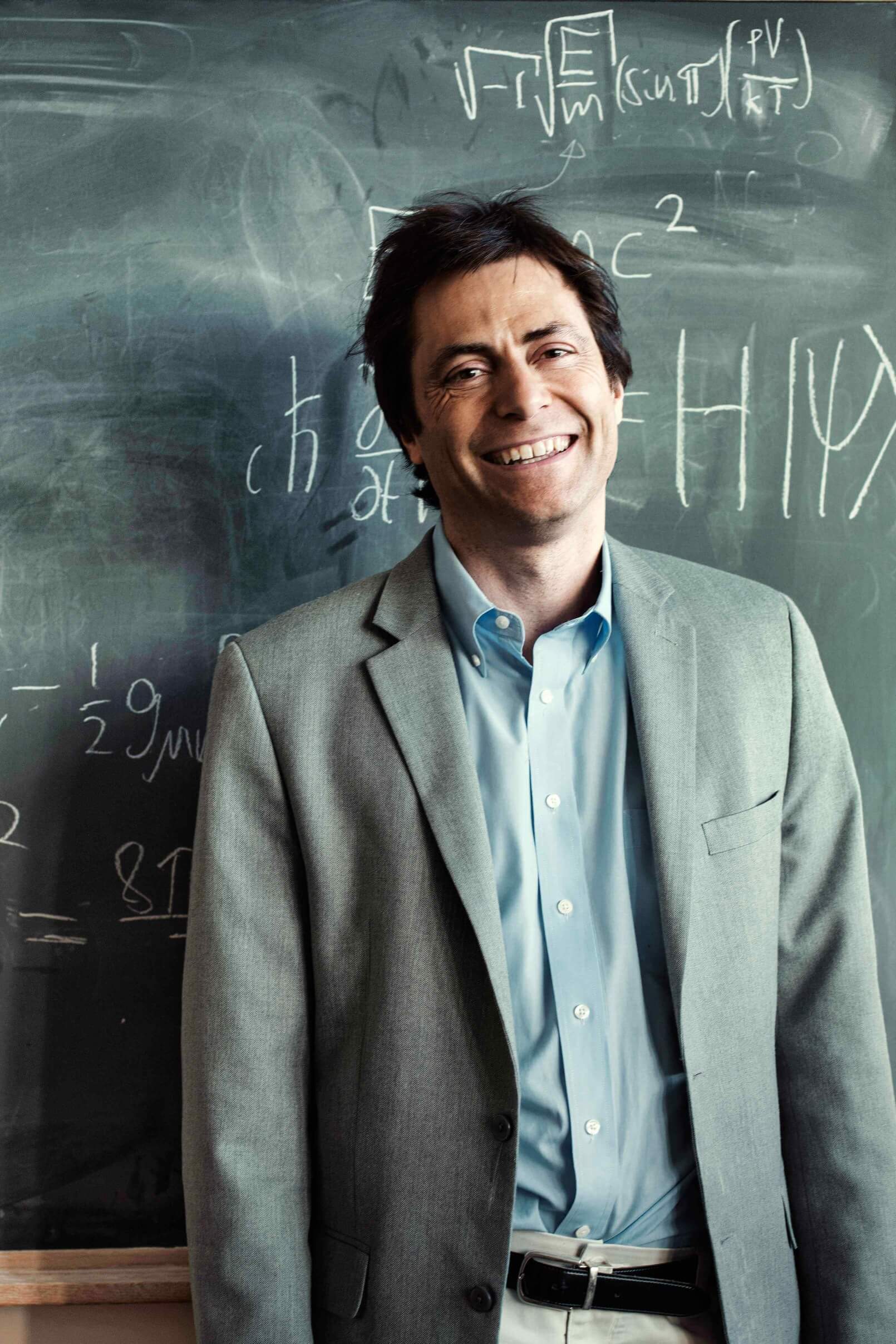

Some people say that renowned MIT physicist

Some people say that renowned MIT physicist  Max Tegmark is driven by curiosity, both about how our universe works and about how we can use the science and technology we discover to help humanity flourish rather than flounder.

Max Tegmark is driven by curiosity, both about how our universe works and about how we can use the science and technology we discover to help humanity flourish rather than flounder. Joi Ito is just one of those people who simply don’t fit a mold. Any mold. He is an entrepreneur who is an activist. He is an academic without a degree. He is a leader who follows. He is a teacher who listens. And an interlocutor who wants you to disagree with him. Overall, I hate to say it but I must put forward my own biases by admitting that this was probably the most fun interview I have ever done. Ever. So, either I let all my personal biases run free on this one or it was truly a gem of an interview. You be the judge as per which one it was and please don’t hesitate to let me know.

Joi Ito is just one of those people who simply don’t fit a mold. Any mold. He is an entrepreneur who is an activist. He is an academic without a degree. He is a leader who follows. He is a teacher who listens. And an interlocutor who wants you to disagree with him. Overall, I hate to say it but I must put forward my own biases by admitting that this was probably the most fun interview I have ever done. Ever. So, either I let all my personal biases run free on this one or it was truly a gem of an interview. You be the judge as per which one it was and please don’t hesitate to let me know.

It’s the start of the third act and explosions tear through the city as the final battle rages with unrelenting mayhem. CGI robots and genetic monsters rampage through buildings, hunting down the short-sighted humans that dared to create them. If only the scientists had listened to those wholesome everyday folks in the first act who pleaded for reason, and begged them not to meddle with the forces of nature. Who will save the world from these ungodly bloodthirsty abominations? Probably that badass guy who plays by his own rules, has a score to settle, and has nothing but contempt for “eggheads.”

It’s the start of the third act and explosions tear through the city as the final battle rages with unrelenting mayhem. CGI robots and genetic monsters rampage through buildings, hunting down the short-sighted humans that dared to create them. If only the scientists had listened to those wholesome everyday folks in the first act who pleaded for reason, and begged them not to meddle with the forces of nature. Who will save the world from these ungodly bloodthirsty abominations? Probably that badass guy who plays by his own rules, has a score to settle, and has nothing but contempt for “eggheads.” About the Author:

About the Author: Jaan Tallinn, co-founder of Skype and Kazaa, got so famous in his homeland of Estonia that people named the biggest city after him. Well, that latter part may not be exactly true but there are few people today who have not used, or at least heard of, Skype or Kazaa. What is much less known, however, is that for the past 10 years Jaan Tallinn has spent a lot of time and money as an evangelist for the dangers of existential risks as well as a generous financial supporter to organizations doing research in the field. And so I was very happy to do an interview with Tallinn.

Jaan Tallinn, co-founder of Skype and Kazaa, got so famous in his homeland of Estonia that people named the biggest city after him. Well, that latter part may not be exactly true but there are few people today who have not used, or at least heard of, Skype or Kazaa. What is much less known, however, is that for the past 10 years Jaan Tallinn has spent a lot of time and money as an evangelist for the dangers of existential risks as well as a generous financial supporter to organizations doing research in the field. And so I was very happy to do an interview with Tallinn. Jaan Tallinn is a founding engineer of Skype and Kazaa. He is a co-founder of the

Jaan Tallinn is a founding engineer of Skype and Kazaa. He is a co-founder of the

Andy E. Williams is Executive Director of the Nobeah Foundation, a not-for-profit organization focusing on raising funds to distribute technology with the potential for transformative social impact. Andy has an undergraduate degree in physics from the University of Toronto. His graduate studies centered on quantum effects in nano-devices.

Andy E. Williams is Executive Director of the Nobeah Foundation, a not-for-profit organization focusing on raising funds to distribute technology with the potential for transformative social impact. Andy has an undergraduate degree in physics from the University of Toronto. His graduate studies centered on quantum effects in nano-devices.