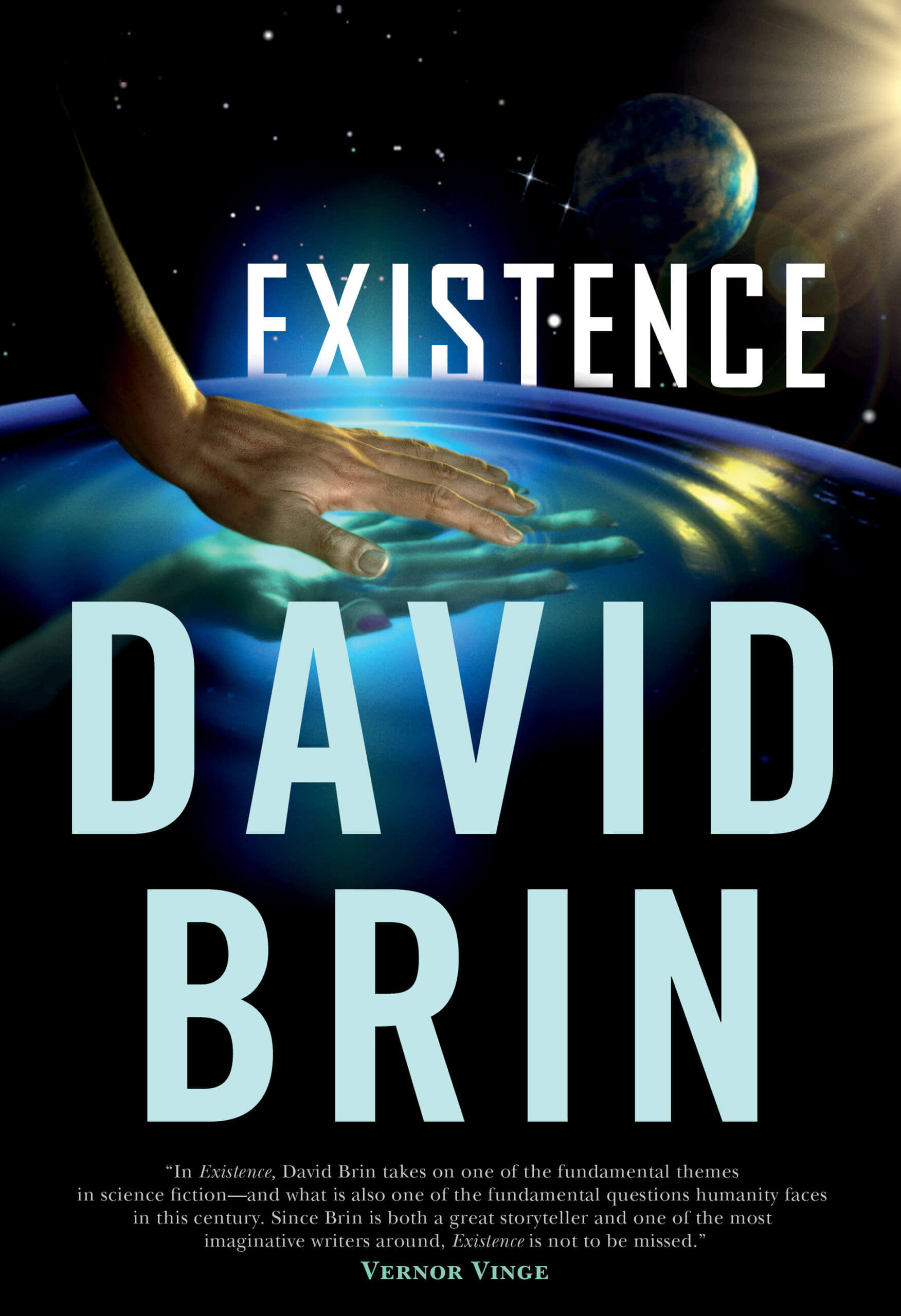

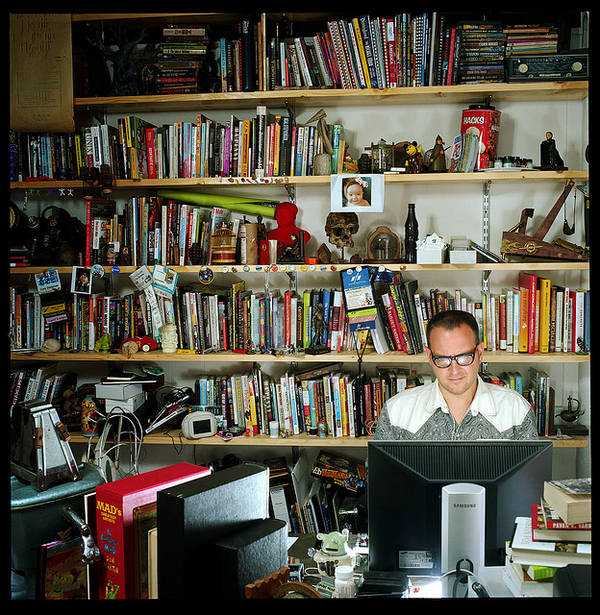

David Brin not only holds a Ph.D. in physics but is also an award-winning, best-selling science fiction author, perhaps best known for his Uplift series of novels and, most recently, Existence.

Originally, I was supposed to interview Brin in the summer. Unfortunately, I got a concussion the day before and had to postpone. David is a busy man, so it took a while to book another date, but eventually we did, and I have to say that I very much enjoyed talking to him and being challenged by him.

During our conversation, Brin and I cover a wide variety of topics, such as his interest in science fiction, writing, and civilization; his novel The Postman, which later became a feature film starring Kevin Costner; his views on postmodernism, progress, ethics, and objective reality; pessimistic versus optimistic science fiction; the self-preventing prophecy as the greatest form of science fiction (e.g., George Orwell’s 1984); his role as lead prosecutor in Star Wars on Trial and Yoda as one of the most evil characters; his latest novel, Existence…

My favorite quote that I will take away from this interview with David Brin is:

What’s important is not me. And it’s not you. It’s us!

Correction: the Oedipus tragedy was written by Sophocles, not by Aristophanes as I say during the interview.

As always, you can listen to or download the audio file above, or scroll down and watch the full video interview. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

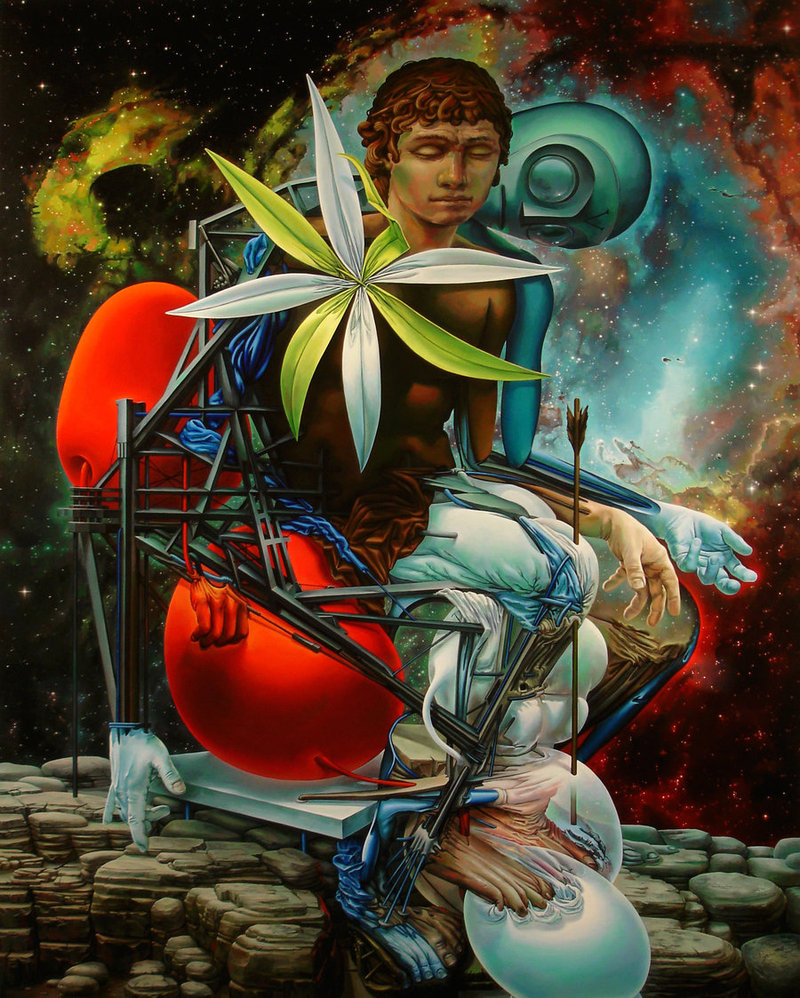

Existence: Book Trailer

Bestselling, award-winning futurist David Brin returns to globe-spanning, high-concept fiction with EXISTENCE. This 3-minute preview offers glimpses and scenes from the novel, all painted especially for this trailer by renowned web artist Patrick Farley, conveying some of the drama and what may be at stake in our near future.

Gerald Livingston is an orbital garbage collector. For a hundred years, people have been abandoning things in space, and someone has to clean them up. But there’s something spinning a little bit higher than he expects, something that isn’t on the decades-old orbital maps. An hour after he grabs it and brings it in, rumors fill Earth’s info mesh about an “alien artifact.”

Thrown into the maelstrom of worldwide shared experience, the Artifact is a game-changer. A message in a bottle; an alien capsule that wants to communicate. The world reacts as humans always do: with fear and hope and selfishness and love and violence. And insatiable curiosity.

Who is David Brin?

David Brin is a scientist, speaker, technical consultant, and world-renowned author. His novels have been New York Times bestsellers, winning multiple Hugo, Nebula, and other awards. At least a dozen have been translated into more than twenty languages.

His 1989 ecological thriller, Earth, foreshadowed global warming, cyberwarfare, and near-future trends such as the World Wide Web. A 1997 movie, directed by Kevin Costner, was loosely based on The Postman.

Brin serves on advisory committees dealing with subjects as diverse as national defense and homeland security, astronomy and space exploration, SETI and nanotechnology, future/prediction, and philanthropy. His non-fiction book — The Transparent Society: Will Technology Force Us To Choose Between Privacy And Freedom? — deals with secrecy in the modern world. It won the Freedom of Speech Prize from the American Library Association.

As a public “scientist/futurist,” David appears frequently on TV, most recently on many episodes of “The Universe” and on the History Channel’s most-watched show ever, “Life After People.” He was also a regular cast member on “The ArciTECHS.”

Brin’s scientific work covers an eclectic range of topics, from astronautics, astronomy, and optics to alternative dispute resolution and the role of neoteny in human evolution. His Ph.D. in physics from UCSD, the University of California at San Diego (the lab of Nobel laureate Hannes Alfvén), followed a master’s in optics and an undergraduate degree in astrophysics from Caltech. He was a postdoctoral fellow at the California Space Institute and the Jet Propulsion Laboratory. His patents directly confront some of the faults of old-fashioned screen-based interaction, aiming to improve the way human beings converse online.

David’s novel Kiln People has been called a book of ideas disguised as a fast-moving and fun noir detective story, set in a future when new technology enables people to physically be in more than two places at once.

His hardcover graphic novel, The Life Eaters, explored alternate outcomes of WWII, winning nominations and high praise in the nation that most loves and respects the graphic novel.

David’s science-fictional Uplift Universe explores a future when humans genetically engineer higher animals like dolphins to become equal members of our civilization. He also recently tied up the loose ends left behind by the late Isaac Asimov. Foundation’s Triumph brings to a grand finale Asimov’s famed Foundation Universe.

As a speaker and on television, David Brin shares unique insights — serious and humorous — about ways that changing technology may affect our future lives. Brin lives in San Diego County with his wife, three children, and a hundred very demanding trees.

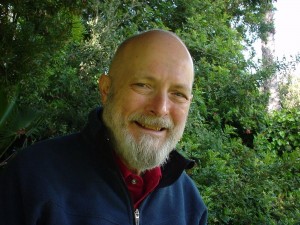

Selected by Foreign Policy magazine as one of their Top 100 Global Thinkers, Jamais Cascio writes about the intersection of emerging technologies, environmental dilemmas, and cultural transformation, specializing in the design and creation of plausible scenarios of the future. His work focuses on the importance of long-term, systemic thinking as a catalyst for building a more resilient society. Cascio’s work appears in publications as diverse as the Atlantic Monthly, the Wall Street Journal, and Foreign Policy. He has been featured in a variety of television programs on future issues, including National Geographic Television’s SIX DEGREES, its 2008 documentary on the effects of global warming, and Canadian Broadcasting Corporation’s 2010 documentary SURVIVING THE FUTURE. Cascio speaks about future possibilities around the world, at venues including the Aspen Environment Forum, Guardian Activate Summit in London, the National Academy of Sciences in Washington DC, and TED.

GWEN IFILL: Next, we take a very different look at a future — the future for human health and longevity.

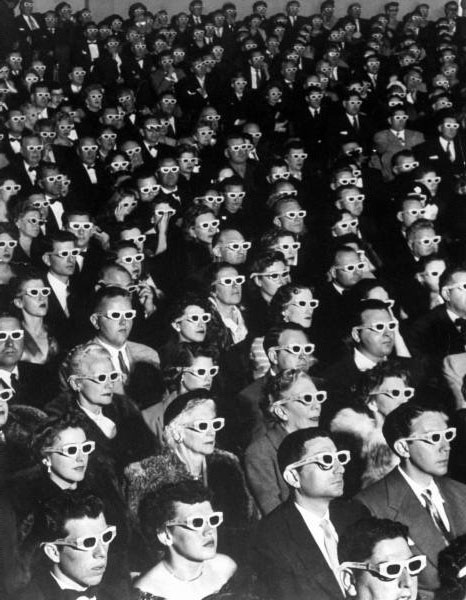

A culture jam is an action, expression, practice, or work of art that subverts mainstream cultural meanings or institutions. It is a popular form of civil disobedience among anti-consumerist groups and other social activists who attempt to re-appropriate cultural iconography and use it to critique mass culture.

Jake Anderson is a writer/comedian/filmmaker living in San Diego, California. He is currently self-publishing an e-book of subversive science fiction stories about life after the Singularity. Check out his

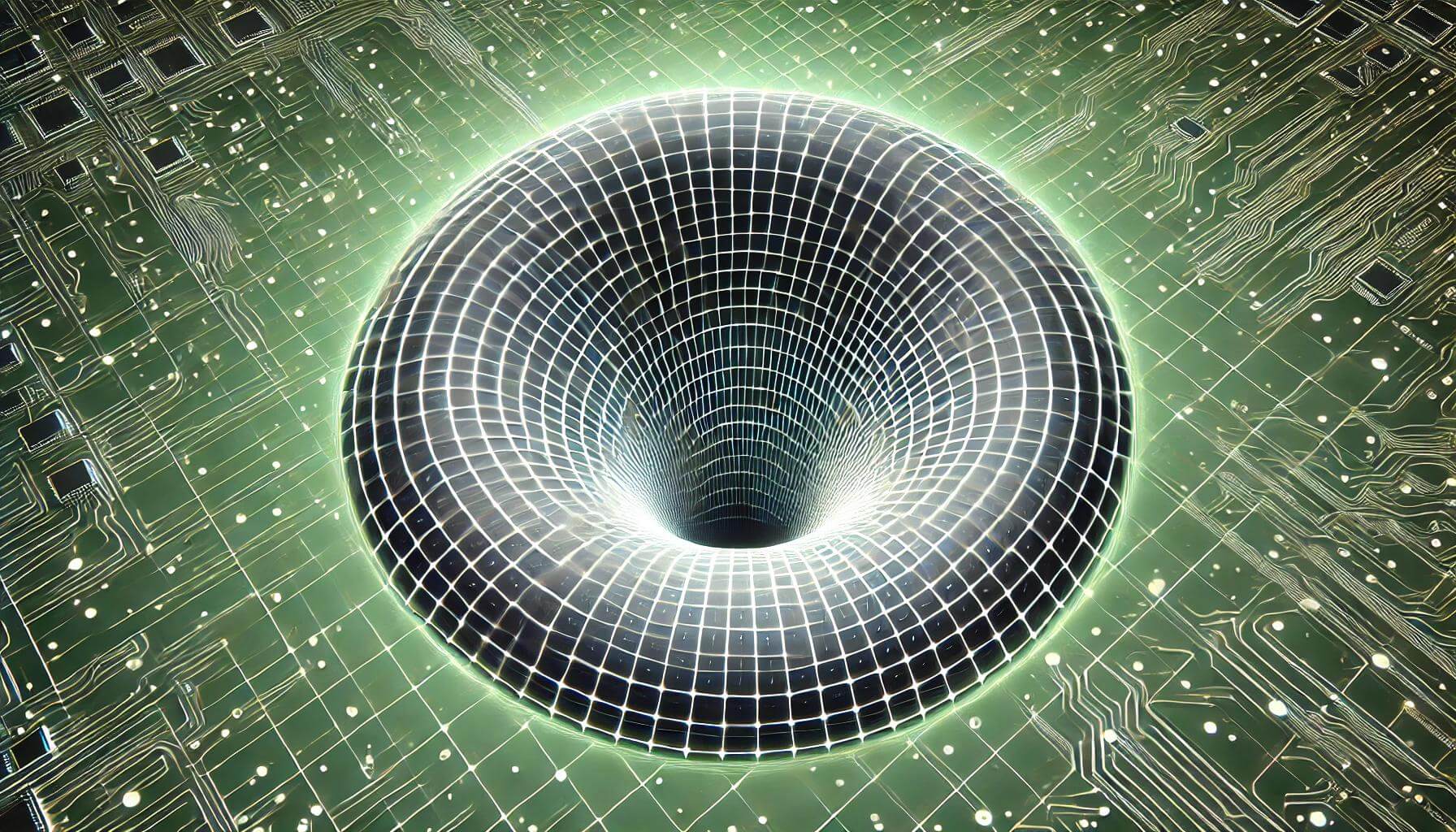

The term singularity has many meanings. Wiktionary, for example, lists the following five:

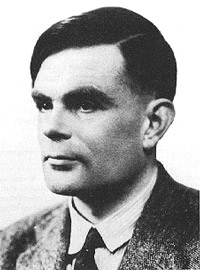

In his 1951 paper titled Intelligent Machinery: A Heretical Theory, Alan Turing wrote of machines that will eventually surpass human intelligence:

In 1958 Stanislaw Ulam wrote about a conversation with John von Neumann, who said that “the ever accelerating progress of technology … gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” Von Neumann’s alleged definition of the singularity was that it is the moment beyond which “technological progress will become incomprehensibly rapid and complicated.”

“We will soon create intelligences greater than our own. When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolation to an interstellar future impossible. To write a story set more than a century hence, one needs a nuclear war in between … so that the world remains intelligible.”

In 1997 Nick Bostrom, a world-renowned philosopher and futurist, wrote

Ray Kurzweil is easily the most popular singularitarian. He embraced Vernor Vinge’s term and brought it into the mainstream. Yet Ray’s definition is not entirely consistent with Vinge’s original. In his seminal book

In 2007 Eliezer Yudkowsky pointed out that

Yesterday I interviewed philosopher

It is only fair that every once-in-a-while
