Hans Moravec famously claimed that robots will be our (mind) children. If that is true, it is natural to wonder What to Expect When You’re Expecting Robots? This is the question that Laura Major and Julie Shah, two expert robot engineers, address in their new book. Given the subject of robots and AI, and the fact that both Julie and Laura have experience in the aerospace, military, robotics, and self-driving car industries, I thought they would make great guests on my podcast. I hope you enjoy our conversation as much as I did.

During this 90-minute interview with Laura Major and Julie Shah, we cover a variety of interesting topics such as: the biggest issues within AI and robotics; why humans and robots should be teammates, not competitors; whether we ought to focus more on the human as a weak link in the system; what happens when technology works as designed and exceeds our expectations; problems in defining driverless (or self-driving) cars, AI, and robots; why, ultimately, technology is not enough; and whether the aerospace industry is a good role model or not.

As always, you can listen to or download the audio file above, or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Julie Shah?

Julie Shah is a roboticist at MIT and an associate dean of social and ethical responsibilities of computing. She directs the Interactive Robotics Group in the Schwarzman College of Computing at MIT. She was a Radcliffe fellow, has received a National Science Foundation Career Award, and has been named one of MIT Technology Review’s “Innovators Under 35.” She lives in Cambridge, Massachusetts.

Who is Laura Major?

Laura Major is CTO of Motional (previously Hyundai-Aptiv Autonomous Driving Joint Venture), where she leads the development of autonomous vehicles. Previously, she led the development of autonomous aerial vehicles at CyPhy Works and a division at Draper Laboratory. Major has been recognized as a national Society of Women Engineers Emerging Leader. She lives in Cambridge, Massachusetts.

While many may be intrigued by the idea, how many of us actually care about robots – in the relating sense of the word?

I first met Dr.

The end of the information age will coincide with the beginning of the robot age. However, we will not soon see a world in which humans and androids walk the streets together, like in movies or cartoons; instead, information technology and robotics will gradually fuse so that people will likely only notice when robot technology is already in use in various locations.

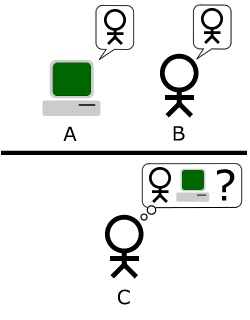

“A drone pilot’s nightmare came true when operators lost control of an armed MQ-9 Reaper flying a combat mission over Afghanistan on Sunday. That led a manned U.S. aircraft to shoot down the unresponsive drone before it flew beyond the edge of Afghanistan airspace.”
