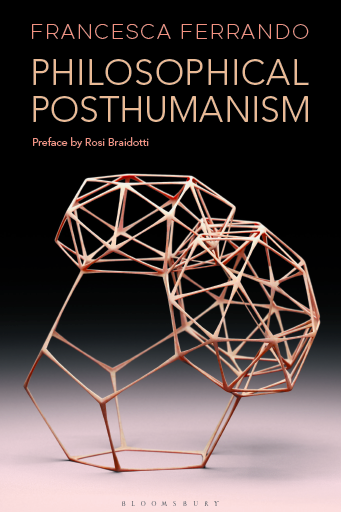

Though admittedly posthumanist, Francesca Ferrando’s Philosophical Posthumanism is the best book on transhumanism that I have read so far. I believe it is a must-read for transhumanists and non-transhumanists alike. In fact, one could argue that Ferrando’s book ranks among the very best not only on the transhuman but also on the human and the posthuman. The reason is simple: Philosophical Posthumanism cracks open, deconstructs, and demystifies all the major historical -isms. Furthermore, it not only lays bare words such as technology but also shows, like nothing else I have read before, how all the puzzle pieces fit together in the historical, ideological, theological, philosophical, etymological, scientific, and decidedly political realms. I hope you enjoy my conversation with Dr. Ferrando and invest the time and effort to read her book.

During this 2-hour interview with Francesca Ferrando, we cover a variety of interesting topics, such as: why I believe Philosophical Posthumanism is a must-read; why the etymological and other roots of a movement matter; child sociology and social mythology; our shared love for Ancient Greek mythology; the definitions of humanism, transhumanism, and posthumanism; why post-modernism is like the quantum mechanics of the humanities; the false distinction between human and transhuman; why the Hedonistic Imperative is merely a new version of the White Man’s Burden; theism and techno-solutionism; Martin Heidegger and the definition, poiesis, and ontological power of technology.

As always, you can listen to or download the audio file above, or scroll down and watch the full video interview. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Francesca Ferrando?

Francesca Ferrando teaches Philosophy at NYU-Liberal Studies, New York University. A leading voice in the field of Posthuman Studies and founder of the Global Posthuman Network, she has been the recipient of numerous honors and recognitions, including the Sainati prize with the Acknowledgement of the President of Italy.

Ferrando has published extensively on these topics, culminating in her latest book, Philosophical Posthumanism (Bloomsbury, 2019), and she was the first speaker in the history of TED talks to give a talk on the topic of the posthuman. These are just some of the reasons why the US magazine “Origins” named Francesca Ferrando among the 100 people making a change in the world.

Have you ever felt lonely, struggled to fit in and be normal, or been rejected just because you’re different?

Today my guest on