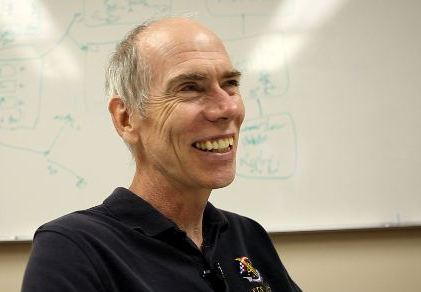

Gary Marcus is not only a professor of psychology but also a computer scientist, programmer, AI researcher, and best-selling author. He recently wrote a critical article titled Ray Kurzweil’s Dubious New Theory of Mind that was published by The New Yorker. After reading his blog post I thought that it would be interesting to invite Gary on Singularity 1 on 1 so that he could talk more about his argument.

During our conversation with Gary we cover a wide variety of topics such as: what is psychology and how he got interested in it; his theory of mind in general and the idea that the mind is a kluge in particular; why the best approach to creating AI is at the bridge between neuroscience and psychology; other projects such as Henry Markram’s Blue Brain, Randal Koene’s Whole Brain Emulation, and Dharmendra Modha’s SyNAPSE; Ray Kurzweil’s Pattern Recognition Theory of Mind; Deep Blue, Watson and the lessons thereof; his take on the technological singularity and the ethics surrounding the creation of friendly AI…

As always you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Gary Marcus?

Gary Marcus is a cognitive scientist and the author of the New York Times bestseller Guitar Zero: The New Musician and the Science of Learning as well as Kluge: The Haphazard Evolution of the Human Mind, which was a New York Times Book Review editor’s choice. You can check out Gary’s other blog posts at The New Yorker on neuroscience, linguistics, and artificial intelligence and follow him on Twitter to stay up to date with his latest work.
