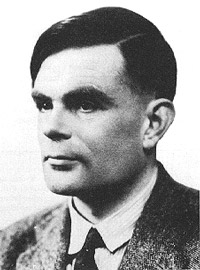

One grotesque error in an otherwise outstanding film, Ex Machina (2015), comes when Nathan Bateman (Oscar Isaac) asks Caleb Smith (Domhnall Gleeson) if he knows what the Turing Test is, despite the fact that both Nathan and Caleb are programming geeks. In fact, Nathan brought Caleb to his home/research facility expressly because of Caleb’s computer background and solitary status. What tech-head doesn’t know about the Turing Test?

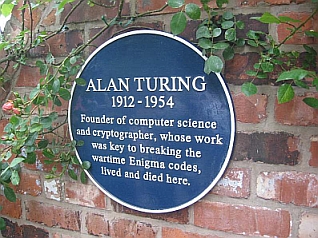

Knowledge of the Turing Test is a general phenomenon in the tech world, but fewer people know all the details, and fewer still understand them. The paper Turing wrote, Computing Machinery and Intelligence (published 1950, Mind 59: 433-460), is divided into seven sections. In the first section Turing poses the question “Can machines think?” and immediately insists that answering it requires defining both terms, “machine” and “think”.

He emphatically warns against trying to define the words based on common usage, comparing such an approach to taking a statistical survey like a Gallup poll, which in effect would be like voting for particular meanings. He calls that methodology absurd. Therefore, he suggests replacing the original question with another that he believes is closely related to it and that can be expressed in relatively unambiguous words.

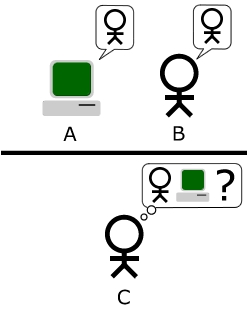

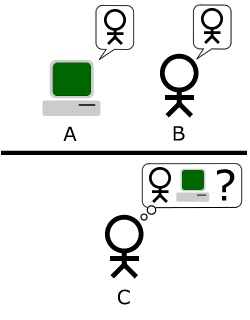

To accomplish the goal of finding proper terminology, he creates what he calls “the imitation game”. We now refer to it as the Turing Test. As most people know, the test involves an interrogator, or interrogators, who try to determine, by asking questions of a player, whether the player is human. If a machine player succeeds in convincing the interrogators that it is human, then the presumption is that the machine possesses some kind of intelligence, i.e., something related to thinking. The test and the idea it embodies have become nearly a cult.

A contest was started by Hugh Loebner that offers the Loebner prize (approximately $3,000). The contest has attracted a bevy of crazies and charlatans. However, two grand luminaries of the digital vista, Ray Kurzweil and Mitch Kapor, have a running bet of $20,000, with Ray wagering the Turing Test will be passed by 2029 and Mitch that it will not. This wager is administered by the Long Now Foundation. Winnings will be donated to a charitable organization determined by the winner.

There are many significant supporters and detractors of the test. One notable critic, George Dvorsky (1970-Living), wrote an article, Why the Turing Test is Bullshit (io9, 2014). A more prominent critic, particularly among academic AI researchers, Marvin Minsky (1927-Living), commented on Singularity 1 on 1 (12 Jul 2013): “The Turing Test is a joke, sort of saying a machine would be intelligent if it does things that an observer would say, must be being done by a human. So it was suggested by Alan Turing as one way to evaluate a machine. But he had never intended it as a way to decide whether a machine was really intelligent.” He has also referred to it as a publicity stunt that does not benefit AI research.

Aristotle said that men have more teeth than women. He was not stupid and could count. He was a genius, but capable of a multitude of errors. And such could be said of Alan Turing. But let’s think about how absurd, to co-opt Alan’s use of the term, is the idea that convincing an interrogator, even a highly intelligent one, could substitute for understanding the principles of intelligence. Dear Alan: WRONG!

Not only was Turing wrong, but he was wrong in a particularly egregious way. Convincing people is what politicians do, by utilizing a small number of not especially intelligent tactics. Advertisers produce buy-in behavior, often in people who have not actually decided rationally on the product. My point is that “canned behavior” can substitute for intelligence very effectively. The “imitation game” almost demands trickery. It certainly incentivizes it immensely.

But the real gunk in the goop here is that the interrogator functions primarily as a voter. Or to put it in starker terms, what Turing did was to replace something he explicitly said should not be voted on with something that is, quintessentially, nothing more than a vote. He effectively replaced “think” or “intelligence” with “believable”. This reduces AI to a magic act.

The Turing Test tries to skirt around the meaning of “thought”. The history of the definitions of thought and/or intelligence has been a circus of semantics. People, including extremely educated ones, have described both as everything from “if-then” rules to spiritual privilege and everything imaginable between. And then there are the fatalistic few who espouse the idea that it is impossible to define thought or intelligence at all.

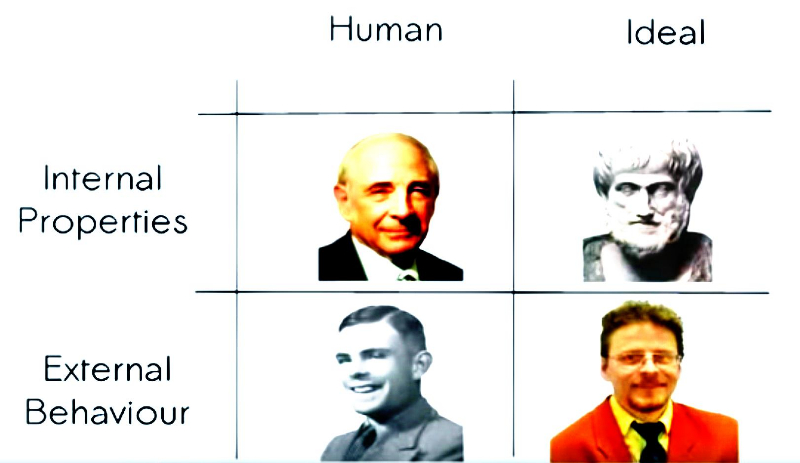

It seems to me that Shane Legg has cleared the big junk from the field of AI by characterizing the four major questions of intelligence in such a way that there is less confusion about what is being talked about. He differentiates the big questions that concern intelligence: (1) the internal workings of the human mind (thoughts), (2) the external workings of the human mind (behavior), (3) the internal workings of ideal intelligence (logic), and (4) the external behavior of ideal intelligence (actions).

The Turing Test is a way of defining human intelligence based solely on behavior. John Searle’s thought experiment, The Chinese Room, is a way of defining human intelligence based solely on the internal activity of the human mind. Aristotle’s logic is a way of defining what is true for any intelligence based solely on the internal properties of the analysis. Marcus Hutter’s assessment of universal intelligence is based solely on the external behavior of the intelligent entity.

People confuse themselves by trying to articulate internal properties by referring to external properties, or vice versa. This is the mistake Turing made, because he did not know how to define the inner workings of intelligence. And people confuse themselves by speaking of universal intelligence as if it could only be a version of human intelligence or vice versa. The best example is the anthropomorphizing that people do when talking about all intelligence as if it were either inferior or superior to human intelligence.

In section six of his 1950 paper, Turing writes, “The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion.” What I believe Turing means is that no one knows what thinking is, and therefore it is not possible to determine if machines are doing it. We of 2015 may not have a detailed, or even adequate, definition of thinking, but we are getting better at detecting things that are part of thinking and things that are not. The Turing Test is not totally useless, because it is another thing that computers can be good, mediocre or bad at. But the Turing Test is, in the broad context of serious AI research, a triviality. And its value is diminishing with time.

The best word on technological unemployment that I know of is Carl Benedikt Frey and Michael Osborne’s The Future of Employment: How Susceptible are Jobs to Computerisation? (17 Sep 2013). On page four they write: “While the computer substitution for both cognitive and manual routine tasks is evident, non-routine tasks involve everything from legal writing, truck driving and medical diagnoses, to persuading and selling. In the present study, we will argue that legal writing and truck driving will soon be automated, while persuading, for instance, will not.”

The assumption that Carl and Michael make regarding persuasion is based on the inability of machines to do creative thinking. They are basing the internal workings of machine intelligence on the external behavior of human beings. I would caution them not to base convictions about inner abilities on activities of outer behavior. I would also remind them that most people are only superficially creative, and that there are ways of getting computer programs to produce novel output by utilizing recursive stochastic routines whose results get culled by standards of valuation.
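One way to read "stochastic routines that get culled by standards of valuation" is as a simple mutate-and-select loop. The Python sketch below is only an illustration under that assumption, not a method taken from any of the works cited here. The names `alternation_score`, `mutate` and `evolve` are hypothetical, and the valuation standard (rewarding strings that alternate vowels and consonants) is an arbitrary stand-in for whatever standard a real system would apply.

```python
import random

ALPHABET = "abcdefghijklmnopqrstuvwxyz"
VOWELS = set("aeiou")


def alternation_score(text):
    """Hypothetical valuation: count adjacent pairs that mix a vowel and a consonant."""
    return sum((a in VOWELS) != (b in VOWELS) for a, b in zip(text, text[1:]))


def mutate(text, rng):
    """Stochastic step: replace one randomly chosen character."""
    i = rng.randrange(len(text))
    return text[:i] + rng.choice(ALPHABET) + text[i + 1:]


def evolve(start, value, generations=200, brood=20, seed=0):
    """Repeatedly generate random variants and keep only the best-valued one."""
    rng = random.Random(seed)
    best = start
    for _ in range(generations):
        candidates = [mutate(best, rng) for _ in range(brood)]
        candidates.append(best)  # the parent competes too, so the value never drops
        best = max(candidates, key=value)  # culling: every other variant is discarded
    return best


if __name__ == "__main__":
    result = evolve("xxxxxxxx", alternation_score)
    print(result, alternation_score(result))
```

Nothing in the loop knows what a good string looks like in advance. The output is shaped only by random variation plus the valuation function, which is the sense in which the result can be novel without the program being "creative" in any inner sense.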

About the Author:

Charles Edward Culpepper, III is a Poet, Philosopher and Futurist who regards employment as a necessary nuisance…
