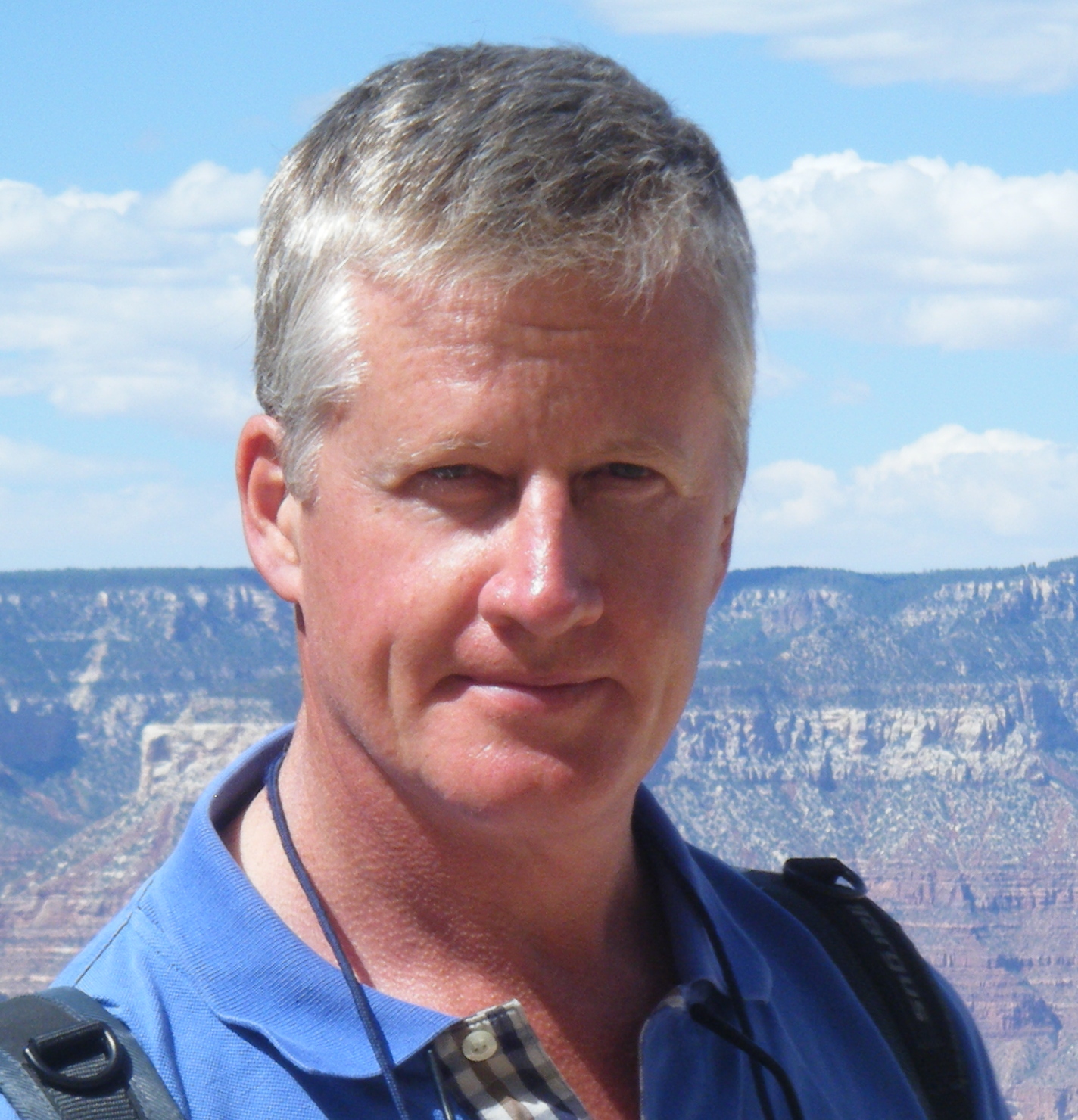

Marek Rosa is the founder and CEO of Keen Software House, an independent video game development studio. After the success of Keen Software House titles such as Space Engineers, Marek founded GoodAI and funded it with a personal investment of 10 million dollars, which finally allowed him to pursue his lifelong dream of building General Artificial Intelligence. Most recently, Marek launched the General AI Challenge, with a fund of 5 million dollars to be given away over the next few years.

During our 80-minute discussion with Marek Rosa we cover a wide variety of interesting topics such as: why curiosity is his driving force; his desire to understand the universe; Marek’s journey from game development into Artificial General Intelligence [AGI]; his goal to maximize humanity’s future options; the mission, people and strategy behind GoodAI; his definitions of intelligence and AI; teleology and the direction of the Universe; adaptation, intelligence, evolution and survivability; roadmaps, milestones and obstacles on the way to AGI; the importance of a theory of intelligence; the hard problem of creating Good AI; the General AI Challenge…

As always you can listen to or download the audio file above or scroll down and watch the video interview in full. To show your support you can write a review on iTunes, make a direct donation or become a patron on Patreon.

Who is Marek Rosa?

Marek Rosa is CEO/CTO at GoodAI, a general artificial intelligence R&D company, and the CEO at Keen Software House, an independent game development studio best known for their best-seller Space Engineers (2mil+ copies sold). Both companies are based in Prague, Czech Republic. Marek has been interested in artificial intelligence since childhood. Marek started his career as a programmer but later transitioned to a leadership role. After the success of the Keen Software House titles, Marek was able to personally fund GoodAI, his new general AI research company building human-level artificial intelligence, with $10mil. GoodAI started in January 2014 and has grown to an international team of 20 researchers.

Recently we have seen a slew of popular films that deal with artificial intelligence – most notably

Dan Elton is a physics PhD candidate at the Institute for Advanced Computational Science at Stony Brook University. He is currently looking for employment in the areas of machine learning and data science. In his spare time he enjoys writing about the effects of new technologies on society. He blogs at

Louis Rosenberg received B.S., M.S. and Ph.D. degrees from Stanford University. His doctoral work produced the Virtual Fixtures platform for the U.S. Air Force, the first immersive Augmented Reality system created. Rosenberg then founded Immersion Corporation (Nasdaq: IMMR), a virtual reality company focused on advanced interfaces. More recently, he founded

Jaan Tallinn, co-founder of Skype and Kazaa, got so famous in his homeland of Estonia that people named the biggest city after him. Well, that latter part may not be exactly true but there are few people today who have not used, or at least heard of, Skype or Kazaa. What is much less known, however, is that for the past 10 years Jaan Tallinn has spent a lot of time and money as an evangelist for the dangers of existential risks as well as a generous financial supporter to organizations doing research in the field. And so I was very happy to do an interview with Tallinn.

Jaan Tallinn is a founding engineer of Skype and Kazaa. He is a co-founder of the

Science fiction author Isaac Asimov’s

While many may be intrigued by the idea, how many of us actually care about robots – in the relating sense of the word?

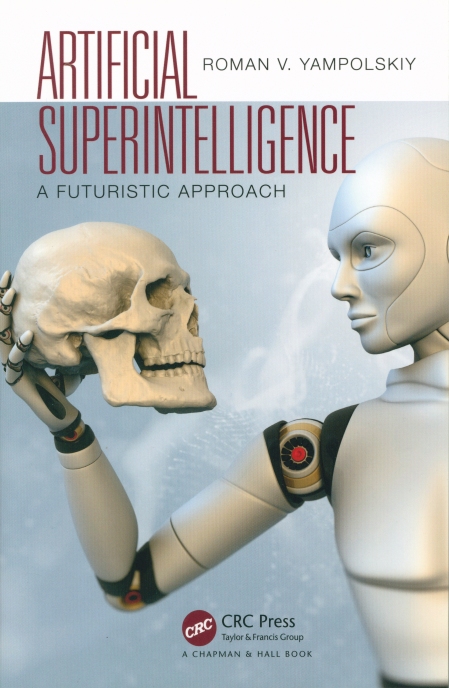

There are those of us who philosophize and debate the finer points surrounding the dangers of artificial intelligence. And then there are those who dare go in the trenches and get their hands dirty by doing the actual work that may just end up making the difference. So if AI turns out to be like the Terminator then Prof.

Dr.

Understanding the possibilities of the future of a field requires first cultivating a sense of its history.

The great influencers and contributors in the field of
