Welcome to Life – the Singularity, Ruined by lawyers is a video that is as funny as it is smart. For this reason, even though it has been making the rounds on the internet for quite some time now, I decided to repost it below for your viewing pleasure. Enjoy!

Welcome To Life:

Hello, and welcome to Life. We regret to inform you that your previous existence ended on January 14, 2052 following a road traffic accident. However, your consciousness was successfully uploaded to the Life network by your primary care provider. You may be experiencing some confusion. Please remain calm. Life contains *ting sound* Your mental state is being temporarily adjusted in order to calm you. Please do not be alarmed. Life contains over thirty thousand unique activities, networking with millions of other digitized minds, and the ability to contact undigitized friends and family. Please accept these terms and conditions in order to continue Life. Your attention is particularly drawn to Section 2: Usage Rules and Limitations, Section 9: Privacy, and Section 11: Restricted Mental Activities. Thank you.

Please select a Life plan.

Terms and conditions

THE LEGAL AGREEMENTS SET OUT BELOW ARE BETWEEN YOU AND LIFE DIGITAL PERSONALITY MANAGEMENT INCORPORATED (HEREAFTER “THE PROVIDER”) AND GOVERN YOUR USE OF THE PROVIDER’S SYSTEMS WHICH INCLUDE, BUT ARE NOT LIMITED TO, THE COMPILATION AND SIMULATION OF YOUR DIGITAL PERSONALITY UNDER THE DIGITAL PERSONALITIES (RIGHTS OF DECEASED PERSONS) ACT 2050.

THE AGREEMENT APPLIES WITHOUT PREJUDICE TO ANY PREVIOUS AGREEMENTS AND CONTRACTS THAT YOU MAY HAVE ENTERED INTO WITH THIRD PARTIES. IMPORTANT: ACCEPTING SIMULATION AS A DIGITAL PERSONALITY MEANS YOU WAIVE YOUR RIGHT TO POST-MORTEM RELEASE OF DEBTS AND OBLIGATIONS. YOUR LIFE MAY BE AT RISK IF YOU DO NOT KEEP UP REPAYMENTS ON A LOAN SECURED ON IT.

1. PAYMENTS AND REFUNDS POLICY

You agree that you will pay for services purchased from the Provider, as well as upgrades, enhancements and experiences (“Apps”) selected from third-party simulation enhancement entities (“App Providers”). The Provider accepts payment by direct transfer from bank accounts in the US, UK, France, Germany and Australia. In the event your payments become significantly in arrears, the Provider reserves the right to a) search your digital personality for the details, locations, and access requirements for assets that you owe in relation to its services (see: Section 9, Privacy) and/or b) terminate your simulation without notice (see: Section 13, Notice of Termination).

! Rejecting these terms will result in termination of your simulated personality.

Accept

Reject

Welcome To Life

Tier One is our premium offering, allowing full uninterrupted simulation of your pre-terminal state. It includes unlimited modification of your body plan, accelerated learning and recall, and full personal backup facilities. Tier Two is our advertiser-supported offering. It contains many of the features of Tier One, but at a significantly reduced cost. Some areas of the environment, such as the sky, may be replaced with targeted advertising, and your personal brand preferences may be altered to align with those of our sponsors. Tier Three is our value offering. Thanks to our commercial partners, your experience at this tier is unlimited. However, some activities, senses, and visual rendering options may be subject to a Fair Use Policy. More complicated mental processes, including subconscious thought, creativity and self-awareness, may be rate-limited or disabled at times of significant server load. Thank you.

Your stored mind contains one or more patterns that contravene the Prevention of Crime and Terrorism Act of 2050. Please stand by while we adjust these patterns. Your stored mind contains sections from 124,564 copyrighted works. In order to continue remembering these copyrighted works, a licensing fee of $18,000 per month is required. Would you like to continue remembering these works? Thank you.

Legal compliance

UNDER INTERNATIONAL TRADE AND COPYRIGHT LAWS, WE ARE UNABLE TO STORE WHOLE OR PART COPYRIGHTED WORKS AS PART OF A DIGITAL PERSONALITY WITHOUT THAT PERSONALITY TENDERING A LICENSING FEE DETERMINED BY THE COPYRIGHT HOLDER. THE FOLLOWING COPYRIGHTED WORKS ARE WHOLLY OR PARTLY CONTAINED WITHIN YOUR STORED PERSONALITY TO A DEGREE THAT CONTRAVENES THE RIGHTS OF INTELLECTUAL PROPERTY HOLDERS:

MUSICAL WORKS OR PERFORMANCES: 57,384

VISUAL WORKS OR PERFORMANCES: 43,586

OLFACTORY WORKS OR PERFORMANCES: 124

OTHER WORKS OR PERFORMANCES: 23,470

You have insufficient funds in any financial reserves to pay this licensing fee.

! Copyrighted works are being deleted.

Welcome to Life.

Please stand by. Welcome to Life. Do you wish to continue?

© Published By Enyay tomscott.com

Why do we fear

When

The term singularity has many meanings.

So, the Wiktionary lists the following five meanings:

In his 1951 paper titled Intelligent Machinery: A Heretical Theory, Alan Turing wrote of machines that will eventually surpass human intelligence:

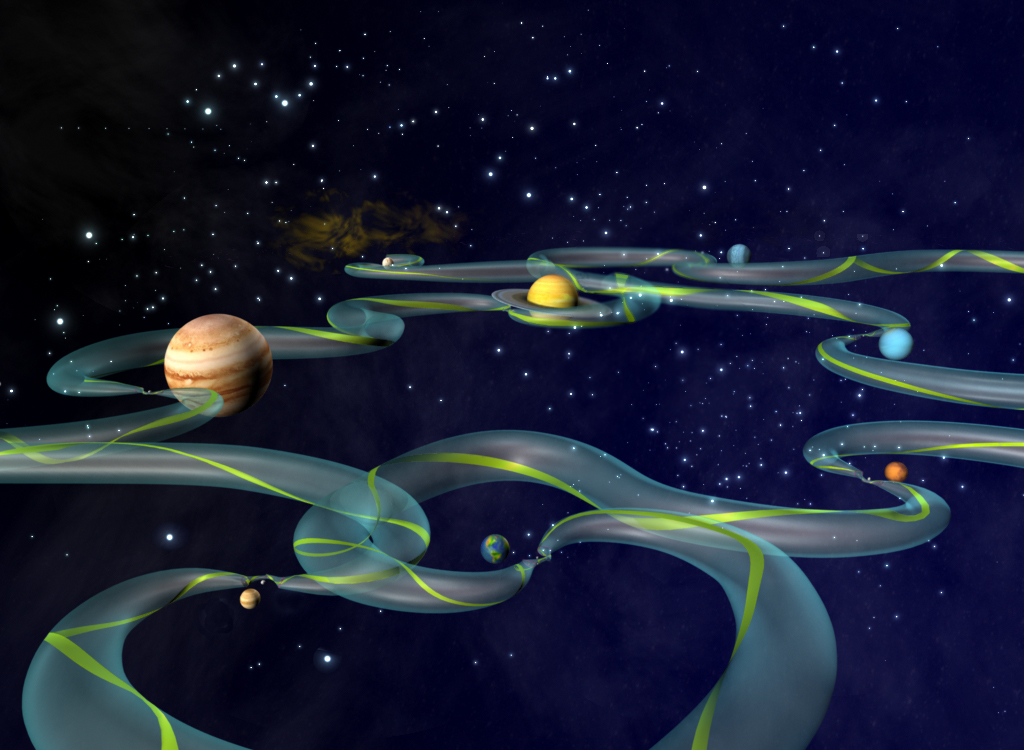

In 1958 Stanislaw Ulam wrote about a conversation with John von Neumann, who said that “the ever accelerating progress of technology … gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” Von Neumann’s alleged definition of the singularity was that it is the moment beyond which “technological progress will become incomprehensibly rapid and complicated.”

“We will soon create intelligences greater than our own. When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the center of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science-fiction writers. It makes realistic extrapolation to an interstellar future impossible. To write a story set more than a century hence, one needs a nuclear war in between … so that the world remains intelligible.”

In

In 1997 Nick Bostrom, a world-renowned philosopher and futurist, wrote

Ray Kurzweil is easily the most popular singularitarian. He embraced Vernor Vinge’s term and brought it into the mainstream. Yet Ray’s definition is not entirely consistent with Vinge’s original. In his seminal book

In 2007 Eliezer Yudkowsky pointed out that

In

A couple of days ago I interviewed

Last week, I interviewed

Today

Nikki Olson is a writer/researcher working on an upcoming book about the Singularity with Dr. Kim Solez, as well as relevant educational material for the Lifeboat Foundation. She has a background in philosophy and sociology, and has been involved extensively in Singularity research for 3 years. You can reach Nikki via email at

The deep connection between human spirituality and advancing technology has proven to be intimate.
