Joscha Bach is a beautiful mind and a very rewarding interlocutor, so it is no surprise that I had many requests to have him back on my podcast. I apologize that it took 4 years, but the good news is that we are going to have a third conversation much sooner this time around. If you haven't seen our first interview with Joscha, I suggest you start there before you watch this one. Enjoy, and don't hesitate to let me know what you think.

During our 2-hour conversation with Joscha Bach, we cover a variety of interesting topics such as his new job at Intel as an AI researcher; whether Moore’s Law is dead or alive; why he is first and foremost a human being trying to understand the world; the kinds of questions he would like to ask God or Artificial Superintelligence; the most recent AI developments and criticisms from Gary Marcus, Marvin Minsky, and Noam Chomsky; living in a learnable universe; evolution and the frame problem; intelligence, smartness, wisdom, and values; personal autonomy and the hive mind; cosmology, theology, story, existence, and non-existence.

My favorite quotes that I will take away from this conversation with Joscha Bach are:

What is a model? A model is a set of regularities that we find in the world – the invariances like the Laws of Physics at a certain level of resolution, that describe how the world doesn’t change but is the same. [These are the things that remain constant.] And the state that the world is in. And once you combine these constants and the known state you can predict the next state of the world.

Intelligence is the ability to dream in a very focused way.

As always, you can listen to or download the audio file above, or scroll down and watch the video interview in full. To show your support, you can write a review on iTunes, make a direct donation, or become a patron on Patreon.

Who is Joscha Bach?

Joscha Bach, Ph.D., is a cognitive scientist focused on cognitive architectures, mental representation, emotion, social modeling, and learning. He earned his Ph.D. in cognitive science from the University of Osnabrück, Germany. He is especially interested in the philosophy of AI, and in using computational models and conceptual tools to understand our minds and what makes us human. Joscha has taught computer science, AI, and cognitive science at the Humboldt University of Berlin, the Institute for Cognitive Science at Osnabrück, and the MIT Media Lab, and authored the book "Principles of Synthetic Intelligence" (Oxford University Press). He currently works at the Harvard Program for Evolutionary Dynamics in Cambridge, Massachusetts.

ReWriting the Human Story: How Our Story Determines Our Future

ReWriting the Human Story: How Our Story Determines Our Future

It’s been 7 years since my

It’s been 7 years since my  Gary Marcus is a scientist, best-selling author, and entrepreneur. He is Founder and CEO of

Gary Marcus is a scientist, best-selling author, and entrepreneur. He is Founder and CEO of

There are those of us who philosophize and debate the finer points surrounding the dangers of artificial intelligence. And then there are those who dare go in the trenches and get their hands dirty by doing the actual work that may just end up making the difference. So if AI turns out to be like the terminator then Prof.

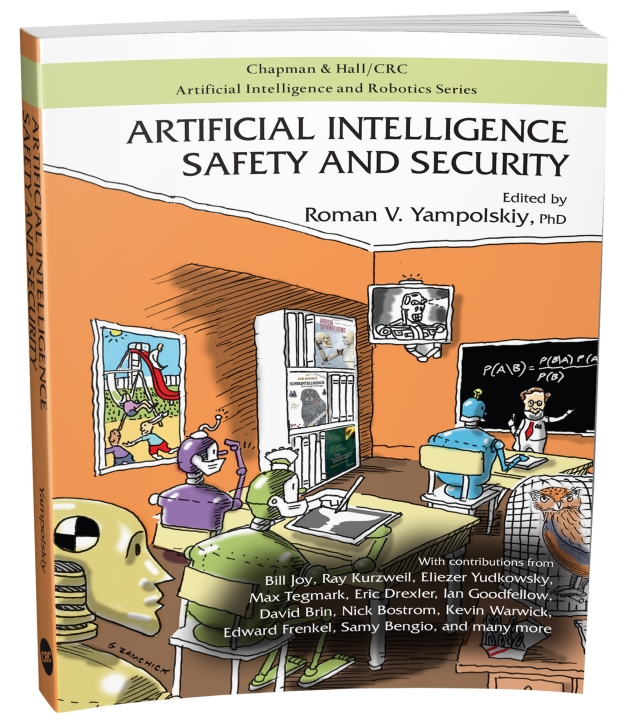

There are those of us who philosophize and debate the finer points surrounding the dangers of artificial intelligence. And then there are those who dare go in the trenches and get their hands dirty by doing the actual work that may just end up making the difference. So if AI turns out to be like the terminator then Prof.  Dr. Roman V. Yampolskiy is a Tenured Associate Professor in the Department of Computer Engineering and Computer Science at the Speed School of Engineering, University of Louisville. He is the founding and current director of the Cyber Security Lab and an author of many books including

Dr. Roman V. Yampolskiy is a Tenured Associate Professor in the Department of Computer Engineering and Computer Science at the Speed School of Engineering, University of Louisville. He is the founding and current director of the Cyber Security Lab and an author of many books including  At the Heart of Intelligence is an emotionally compelling and well-made short film discussing artificial intelligence. It was produced in collaboration between popular futurist

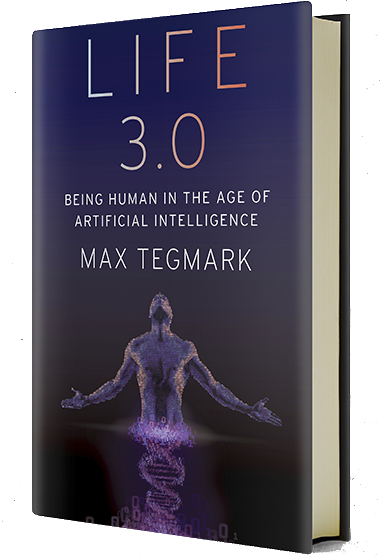

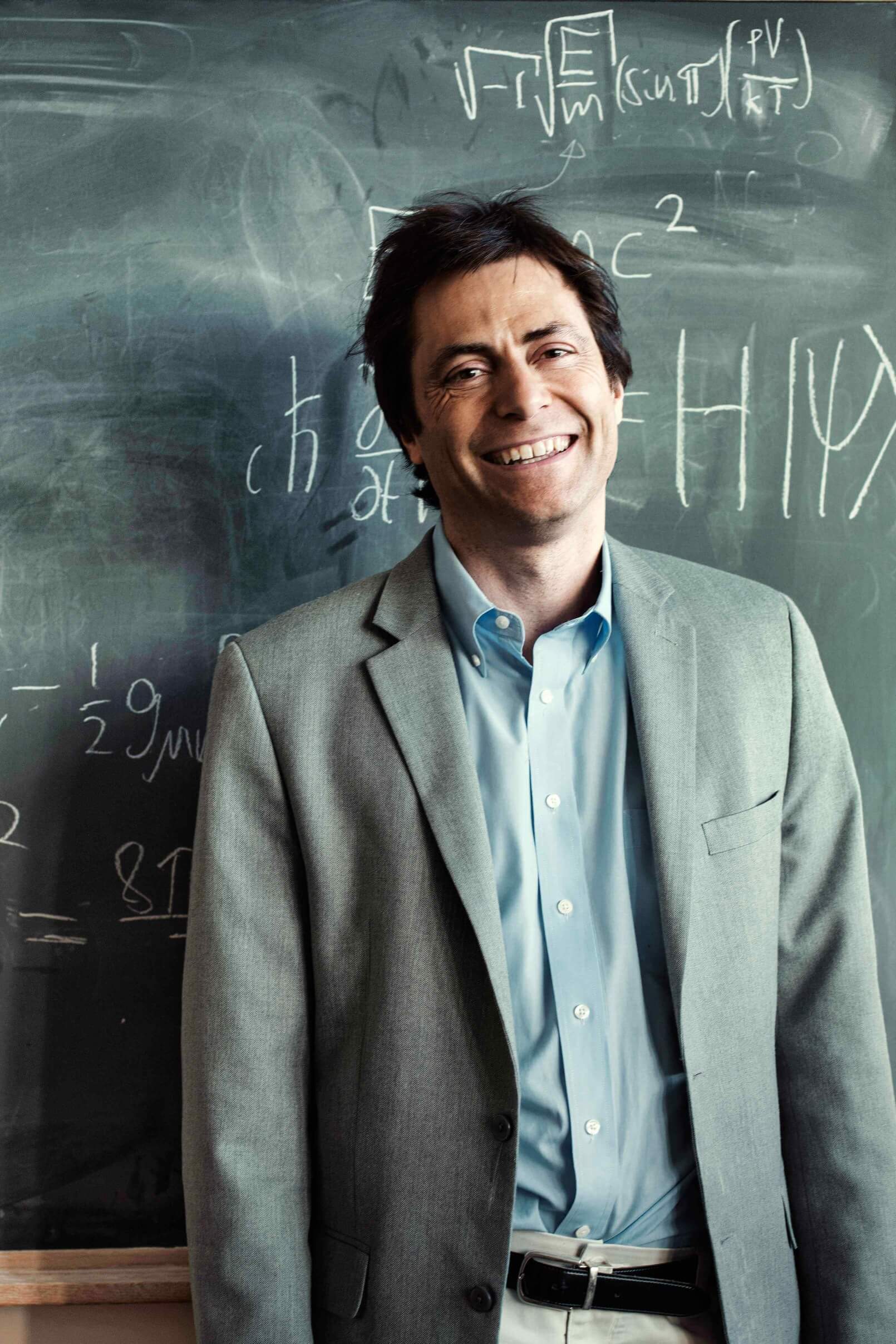

At the Heart of Intelligence is an emotionally compelling and well-made short film discussing artificial intelligence. It was produced in collaboration between popular futurist  Some people say that renowned MIT physicist

Some people say that renowned MIT physicist  Max Tegmark is driven by curiosity, both about how our universe works and about how we can use the science and technology we discover to help humanity flourish rather than flounder.

Max Tegmark is driven by curiosity, both about how our universe works and about how we can use the science and technology we discover to help humanity flourish rather than flounder. General AI research and development company GoodAI has launched the latest round of their General AI Challenge, Solving the AI Race. $15,000 of prizes are available for suggestions on how to mitigate the risks associated with a race to transformative AI.

General AI research and development company GoodAI has launched the latest round of their General AI Challenge, Solving the AI Race. $15,000 of prizes are available for suggestions on how to mitigate the risks associated with a race to transformative AI. The Intelligence Explosion is a hilariously witty short sci fi film about AI ethics. The film is asking questions such as:

The Intelligence Explosion is a hilariously witty short sci fi film about AI ethics. The film is asking questions such as: